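Prerequisites

- C language basics, including pointers

- Basic data structures and algorithms

- Assembly language basics

- FreeRTOS (referred to below as "the OS")

Macros whose names start with `trace` are hooks for debugging and tracing. They expand to nothing unless a trace tool defines them.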

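Task creation in the OS

- The OS provides two functions for creating a task:

- xTaskCreate: dynamically allocates both the TCB (sizeof(TCB_t) bytes) and the task stack from the FreeRTOS heap.

- xTaskCreateStatic: uses a TCB buffer (StaticTask_t) and a stack buffer supplied by the application. Both buffers must stay valid for the whole lifetime of the task. In practice this means global or static variables, never local variables on a function's stack.

The TCB structure

- Every task that is created gets its own TCB.

- The TCB (Task Control Block) holds the task's bookkeeping data. This includes the stack address, the saved top-of-stack pointer, the priority, and the state and event list items.

- In the kernel source only pxTopOfStack, xStateListItem, xEventListItem, uxPriority, pxStack and pcTaskName are unconditional. Every other member is wrapped in an `#if` block and exists only when the matching configuration option is enabled. Those guards are omitted in the listing below.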


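```c
typedef struct tskTaskControlBlock
{
    volatile StackType_t * pxTopOfStack; // Always points to the last item pushed onto the task's stack. It MUST be the first member of the TCB, because the context-switch assembly reads it at offset 0
    xMPU_SETTINGS xMPUSettings;          // MPU settings (MPU ports only); must be the second member
    UBaseType_t uxCoreAffinityMask;      // SMP only: bit mask of the cores the task may run on
    ListItem_t xStateListItem;           // State list item: links the task into a ready list (indexed by priority), a delayed list or the suspended list. While the task is delayed, its item value holds the absolute tick count at which it should wake
    ListItem_t xEventListItem;           // Event list item: links the task into an event list (e.g. tasks waiting on a queue)
    UBaseType_t uxPriority;              // Task priority; 0 is the lowest
    StackType_t * pxStack;               // Start (lowest address) of the task's stack memory
    volatile BaseType_t xTaskRunState;   /**< Used to identify the core the task is running on, if the task is running. Otherwise, identifies the task's state - not running or yielding. */
    UBaseType_t uxTaskAttributes;        /**< Task's attributes - currently used to identify the idle tasks. */
    char pcTaskName[ configMAX_TASK_NAME_LEN ]; // Task name; configMAX_TASK_NAME_LEN (16 by default) includes the terminating '\0'
    BaseType_t xPreemptionDisable;       // When the OS is configured for preemption, prevents this task from being preempted
    StackType_t * pxEndOfStack;          // Points to the highest valid address of the stack
    UBaseType_t uxCriticalNesting;       // Critical-section nesting depth: incremented on each entry, decremented on each exit
    UBaseType_t uxTCBNumber;             // Incremented every time a TCB is created, so debuggers can tell when a task was deleted and re-created
    UBaseType_t uxTaskNumber;            // Number reserved for third-party trace tools
    UBaseType_t uxBasePriority;          // Priority last assigned to the task; used by mutex priority inheritance
    UBaseType_t uxMutexesHeld;           // Number of mutexes the task currently holds
    TaskHookFunction_t pxTaskTag;        // Application-defined task tag / hook function
    void * pvThreadLocalStoragePointers[ configNUM_THREAD_LOCAL_STORAGE_POINTERS ]; // Thread-local storage pointers
    configRUN_TIME_COUNTER_TYPE ulRunTimeCounter; /**< Stores the amount of time the task has spent in the Running state. */
    configTLS_BLOCK_TYPE xTLSBlock;      /**< Memory block used as Thread Local Storage (TLS) Block for the task. */

    // Every task has an array of task notifications. Each entry has a "pending" or "not pending" state and a 32-bit value
    volatile uint32_t ulNotifiedValue[ configTASK_NOTIFICATION_ARRAY_ENTRIES ]; // Notification values
    volatile uint8_t ucNotifyState[ configTASK_NOTIFICATION_ARRAY_ENTRIES ];    // Notification states

    /* See the comments in FreeRTOS.h with the definition of
     * tskSTATIC_AND_DYNAMIC_ALLOCATION_POSSIBLE. */
    uint8_t ucStaticallyAllocated;       // Records whether the TCB and/or stack were allocated statically, so the kernel never tries to free static memory
    uint8_t ucDelayAborted;              // Set when a delay was cut short, e.g. by xTaskAbortDelay() waking the task early
    int iTaskErrno;                      // Per-task errno value (configUSE_POSIX_ERRNO)
} tskTCB;
```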
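You can check the rule that pxTopOfStack must be the first member with `offsetof`. The sketch below is a host-side illustration only. `MiniTCB_t` and `read_saved_sp()` are hypothetical names, and the struct is a trimmed stand-in for `TCB_t`. `read_saved_sp()` copies what the port's context-switch assembly does: it takes the TCB address and reads the word at offset 0, without knowing anything else about the layout.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, trimmed stand-ins for the kernel's types (not the real TCB_t). */
typedef uint32_t StackType_t;

typedef struct MiniTCB
{
    volatile StackType_t * pxTopOfStack; /* must remain the first member */
    uint32_t uxPriority;
    char pcTaskName[ 16 ];
} MiniTCB_t;

/* Fails to compile if pxTopOfStack is ever moved away from offset 0. */
_Static_assert( offsetof( MiniTCB_t, pxTopOfStack ) == 0, "pxTopOfStack must be first" );

/* Mimics the context-switch assembly: given only the TCB address, read the
 * pointer stored at offset 0 of the TCB, i.e. the saved top of stack. */
static volatile StackType_t * read_saved_sp( const void * pvTCB )
{
    return *( volatile StackType_t * const * ) pvTCB;
}
```

Because the assembly only ever needs offset 0, adding or reordering the later, conditionally compiled members does not affect the context switch.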


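xTaskCreate: creating a task dynamically

- This function creates an OS task whose TCB and stack are allocated dynamically. Its parameters are:

- pxTaskCode: pointer to the task function

- pcName: descriptive name of the task (mainly used for debugging)

- uxStackDepth: stack depth, i.e. the stack size counted in StackType_t words, not bytes

- pvParameters: parameter passed to the task function

- uxPriority: priority of the task

- pxCreatedTask: optional out-parameter that receives the task handle (may be NULL)

- Walkthrough of the function:

- It declares a TCB_t pointer to receive the newly created TCB and calls prvCreateTask to allocate and initialise it. Only if that succeeds is the task added to the ready list.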


```c
BaseType_t xTaskCreate( TaskFunction_t pxTaskCode,
                        const char * const pcName,
                        const configSTACK_DEPTH_TYPE uxStackDepth,
                        void * const pvParameters,
                        UBaseType_t uxPriority,
                        TaskHandle_t * const pxCreatedTask )
{
    TCB_t * pxNewTCB;
    BaseType_t xReturn;

    traceENTER_xTaskCreate( pxTaskCode, pcName, uxStackDepth, pvParameters, uxPriority, pxCreatedTask );

    // Allocate and initialise the TCB and stack, forwarding all parameters
    pxNewTCB = prvCreateTask( pxTaskCode, pcName, uxStackDepth, pvParameters, uxPriority, pxCreatedTask );

    if( pxNewTCB != NULL ) // Did creation succeed?
    {
        #if ( ( configNUMBER_OF_CORES > 1 ) && ( configUSE_CORE_AFFINITY == 1 ) )
        {
            /* Set the task's affinity before scheduling it. */
            // Cores the task may run on; by default, all cores
            pxNewTCB->uxCoreAffinityMask = configTASK_DEFAULT_CORE_AFFINITY;
        }
        #endif

        // Add the new task to the ready list so the scheduler can run it
        prvAddNewTaskToReadyList( pxNewTCB );
        xReturn = pdPASS;
    }
    else // Creation failed
    {
        xReturn = errCOULD_NOT_ALLOCATE_REQUIRED_MEMORY; // Not enough heap for the TCB or the stack
    }

    traceRETURN_xTaskCreate( xReturn );

    // pdPASS (1) on success, errCOULD_NOT_ALLOCATE_REQUIRED_MEMORY (-1) if the TCB or the stack could not be allocated
    return xReturn;
}
```
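You can model the success/failure contract of xTaskCreate on a host machine. This is a minimal sketch, not kernel code:

- `fake_xTaskCreate()`, `fake_create_task()`, `FakeTCB_t` and the `xFailAllocations` switch are hypothetical.
- `malloc()` stands in for `pvPortMalloc()`.
- The return values are assumed to match projdefs.h, where `pdPASS` is 1 and `errCOULD_NOT_ALLOCATE_REQUIRED_MEMORY` is -1.

```c
#include <assert.h>
#include <stdlib.h>

/* Assumed to match the 32-bit ARM ports and projdefs.h. */
typedef long BaseType_t;
#define pdPASS                                   ( ( BaseType_t ) 1 )
#define errCOULD_NOT_ALLOCATE_REQUIRED_MEMORY    ( ( BaseType_t ) -1 )

/* Hypothetical stand-ins for the TCB, the ready list and the heap. */
typedef struct { unsigned uxPriority; } FakeTCB_t;
static unsigned uxTasksInReadyList = 0;
static int xFailAllocations = 0;          /* set to 1 to simulate an exhausted heap */

/* Plays the role of prvCreateTask(): returns NULL when memory runs out. */
static FakeTCB_t * fake_create_task( unsigned uxPriority )
{
    FakeTCB_t * pxTCB = xFailAllocations ? NULL : malloc( sizeof( *pxTCB ) );

    if( pxTCB != NULL )
    {
        pxTCB->uxPriority = uxPriority;
    }

    return pxTCB;
}

/* Same control flow as xTaskCreate(): only a successfully created task is made
 * ready; otherwise the memory error code is returned and nothing else changes. */
static BaseType_t fake_xTaskCreate( unsigned uxPriority, FakeTCB_t ** ppxCreatedTask )
{
    FakeTCB_t * pxNewTCB = fake_create_task( uxPriority );

    if( pxNewTCB == NULL )
    {
        return errCOULD_NOT_ALLOCATE_REQUIRED_MEMORY;
    }

    if( ppxCreatedTask != NULL )
    {
        *ppxCreatedTask = pxNewTCB;      /* the kernel does this in prvInitialiseNewTask() */
    }

    uxTasksInReadyList++;                /* stands in for prvAddNewTaskToReadyList() */
    return pdPASS;
}
```

In application code the equivalent check is to compare the value returned by xTaskCreate against pdPASS and handle the error otherwise.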

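prvCreateTask: the core of task creation

- The function is only compiled when dynamic allocation is enabled (configSUPPORT_DYNAMIC_ALLOCATION == 1). Otherwise the preprocessor removes it, which keeps the image smaller.

- The order in which the task stack and the TCB are allocated depends on the direction in which the stack grows.

- If one of the two allocations fails, the block that was already allocated is freed again, so no memory is leaked.

- If both allocations succeed, prvInitialiseNewTask is called to initialise the task.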


```c
static TCB_t * prvCreateTask( TaskFunction_t pxTaskCode,
                              const char * const pcName,
                              const configSTACK_DEPTH_TYPE uxStackDepth,
                              void * const pvParameters,
                              UBaseType_t uxPriority,
                              TaskHandle_t * const pxCreatedTask )
{
    TCB_t * pxNewTCB;

    /* If the stack grows down then allocate the stack then the TCB so the stack
     * does not grow into the TCB.  Likewise if the stack grows up then allocate
     * the TCB then the stack.
     *
     * portSTACK_GROWTH > 0 means the stack grows up; < 0 means it grows down.
     * The layouts below assume the heap returns successive blocks at
     * increasing addresses.
     *
     * Stack grows up: allocate the TCB first, then the stack.
     *
     *   low address  -> +-----------------+
     *                   |      TCB        |  allocated first
     *                   +-----------------+
     *                   |     stack       |  allocated second; grows toward
     *                   |                 |  higher addresses
     *   high address -> +-----------------+
     *
     *   An overflow runs toward higher addresses, away from the TCB.
     *
     * Stack grows down: allocate the stack first, then the TCB.
     *
     *   high address -> +-----------------+
     *                   |      TCB        |  allocated second
     *                   +-----------------+
     *                   |     stack       |  allocated first; starts at its high
     *                   |                 |  end and grows toward lower addresses
     *   low address  -> +-----------------+
     *
     *   An overflow runs toward lower addresses, again away from this task's
     *   own TCB. It can still corrupt whatever lies below the stack, which is
     *   what the kernel's optional stack-overflow checking is for. */
    #if ( portSTACK_GROWTH > 0 )
    {
        /* Allocate space for the TCB.  Where the memory comes from depends on
         * the implementation of the port malloc function and whether or not static
         * allocation is being used. */
        /* MISRA Ref 11.5.1 [Malloc memory assignment] */
        /* More details at: https://github.com/FreeRTOS/FreeRTOS-Kernel/blob/main/MISRA.md#rule-115 */
        /* coverity[misra_c_2012_rule_11_5_violation] */
        // Allocate the memory block that holds the TCB
        pxNewTCB = ( TCB_t * ) pvPortMalloc( sizeof( TCB_t ) );

        if( pxNewTCB != NULL ) // NULL means the allocation failed
        {
            ( void ) memset( ( void * ) pxNewTCB, 0x00, sizeof( TCB_t ) );

            /* Allocate space for the stack used by the task being created.
             * The base of the stack memory stored in the TCB so the task can
             * be deleted later if required. */
            /* MISRA Ref 11.5.1 [Malloc memory assignment] */
            /* More details at: https://github.com/FreeRTOS/FreeRTOS-Kernel/blob/main/MISRA.md#rule-115 */
            /* coverity[misra_c_2012_rule_11_5_violation] */
            pxNewTCB->pxStack = ( StackType_t * ) pvPortMallocStack( ( ( ( size_t ) uxStackDepth ) * sizeof( StackType_t ) ) );

            if( pxNewTCB->pxStack == NULL ) // Stack allocation failed
            {
                /* Could not allocate the stack.  Delete the allocated TCB. */
                vPortFree( pxNewTCB ); // Free the TCB again so it is not leaked
                pxNewTCB = NULL;
            }
        }
    }
    #else /* portSTACK_GROWTH */
    {
        StackType_t * pxStack;

        /* Allocate space for the stack used by the task being created. */
        /* MISRA Ref 11.5.1 [Malloc memory assignment] */
        /* More details at: https://github.com/FreeRTOS/FreeRTOS-Kernel/blob/main/MISRA.md#rule-115 */
        /* coverity[misra_c_2012_rule_11_5_violation] */
        pxStack = pvPortMallocStack( ( ( ( size_t ) uxStackDepth ) * sizeof( StackType_t ) ) );

        if( pxStack != NULL )
        {
            /* Allocate space for the TCB. */
            /* MISRA Ref 11.5.1 [Malloc memory assignment] */
            /* More details at: https://github.com/FreeRTOS/FreeRTOS-Kernel/blob/main/MISRA.md#rule-115 */
            /* coverity[misra_c_2012_rule_11_5_violation] */
            pxNewTCB = ( TCB_t * ) pvPortMalloc( sizeof( TCB_t ) );

            if( pxNewTCB != NULL )
            {
                ( void ) memset( ( void * ) pxNewTCB, 0x00, sizeof( TCB_t ) );

                /* Store the stack location in the TCB. */
                pxNewTCB->pxStack = pxStack;
            }
            else
            {
                /* The stack cannot be used as the TCB was not created.  Free
                 * it again. */
                vPortFreeStack( pxStack ); // TCB allocation failed: free the stack that was already allocated
            }
        }
        else
        {
            pxNewTCB = NULL;
        }
    }
    #endif /* portSTACK_GROWTH */

    if( pxNewTCB != NULL )
    {
        #if ( tskSTATIC_AND_DYNAMIC_ALLOCATION_POSSIBLE != 0 )
        {
            /* Tasks can be created statically or dynamically, so note this
             * task was created dynamically in case it is later deleted. */
            // tskDYNAMICALLY_ALLOCATED_STACK_AND_TCB (0): both TCB and stack came from the heap
            pxNewTCB->ucStaticallyAllocated = tskDYNAMICALLY_ALLOCATED_STACK_AND_TCB;
        }
        #endif /* tskSTATIC_AND_DYNAMIC_ALLOCATION_POSSIBLE */

        // Initialise the new task
        prvInitialiseNewTask( pxTaskCode, pcName, uxStackDepth, pvParameters, uxPriority, pxCreatedTask, pxNewTCB, NULL );
    }

    return pxNewTCB;
}
```
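A toy allocator shows why the allocation order matters. The sketch rests on one assumption: successive allocations come back at increasing addresses. That is typical of a fresh heap, but no allocator guarantees it.

- A simple bump allocator, `bump_malloc()`, stands in for `pvPortMalloc()`.
- `allocate_task()` and `Layout_t` are hypothetical names.

With a downward-growing stack, the stack block ends up below the TCB, so an overflow moves away from the TCB. With an upward-growing stack it is the other way round.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical bump allocator: every block is placed right after the previous
 * one, i.e. at a higher address (an assumption about the heap, not a guarantee). */
static uint8_t ucHeap[ 1024 ];
static size_t xNextFree = 0;

static void * bump_malloc( size_t xSize )
{
    void * pv = NULL;

    if( xSize <= sizeof( ucHeap ) - xNextFree )
    {
        pv = &ucHeap[ xNextFree ];
        xNextFree += xSize;
    }

    return pv;
}

typedef struct
{
    uint8_t * pucTCB;
    uint8_t * pucStack;
} Layout_t;

/* Allocation order used by prvCreateTask():
 * growth < 0 (e.g. Cortex-M): stack first, then TCB;
 * growth > 0: TCB first, then stack. */
static Layout_t allocate_task( int iStackGrowth, size_t xTCBSize, size_t xStackBytes )
{
    Layout_t xLayout;

    if( iStackGrowth < 0 )
    {
        xLayout.pucStack = bump_malloc( xStackBytes );
        xLayout.pucTCB = bump_malloc( xTCBSize );
    }
    else
    {
        xLayout.pucTCB = bump_malloc( xTCBSize );
        xLayout.pucStack = bump_malloc( xStackBytes );
    }

    return xLayout;
}
```

The real function also rolls back the first allocation when the second one fails. The sketch leaves that out because a bump allocator cannot free.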

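prvInitialiseNewTask: initialising the task

- If portUSING_MPU_WRAPPERS is 1 (the port is built with MPU support), the function first checks whether the task asked to run in privileged mode. It then clears the privilege flag from uxPriority.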
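On MPU ports the privilege request travels inside the priority argument itself, so the caller ORs portPRIVILEGE_BIT into uxPriority. The sketch below shows the test-then-clear step in isolation.

- The value 0x80000000 is assumed from the ARMv7-M MPU ports. Ports without MPU support define portPRIVILEGE_BIT as 0.
- `split_priority()` and `PrioritySplit_t` are hypothetical names.

```c
#include <assert.h>
#include <stdint.h>

typedef uint32_t UBaseType_t;

/* Assumption: the value used by the ARMv7-M MPU ports. Ports without MPU
 * support define portPRIVILEGE_BIT as 0, so nothing is stripped there. */
#define portPRIVILEGE_BIT    ( ( UBaseType_t ) 0x80000000UL )

/* Hypothetical result type for this sketch. */
typedef struct
{
    int xRunPrivileged;
    UBaseType_t uxPriority;
} PrioritySplit_t;

/* The same two steps as prvInitialiseNewTask(): test the flag, then clear it. */
static PrioritySplit_t split_priority( UBaseType_t uxPriority )
{
    PrioritySplit_t xSplit;

    xSplit.xRunPrivileged = ( ( uxPriority & portPRIVILEGE_BIT ) != 0U );
    xSplit.uxPriority = uxPriority & ~portPRIVILEGE_BIT;

    return xSplit;
}
```

For example, passing `3 | portPRIVILEGE_BIT` yields a privileged task at priority 3, while passing plain `3` yields an unprivileged one at the same priority.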


```c
static void prvInitialiseNewTask( TaskFunction_t pxTaskCode,
                                  const char * const pcName,
                                  const configSTACK_DEPTH_TYPE uxStackDepth,
                                  void * const pvParameters,
                                  UBaseType_t uxPriority,
                                  TaskHandle_t * const pxCreatedTask,
                                  TCB_t * pxNewTCB,
                                  const MemoryRegion_t * const xRegions )
{
    StackType_t * pxTopOfStack;
    UBaseType_t x;

    #if ( portUSING_MPU_WRAPPERS == 1 )
        /* Should the task be created in privileged mode? */
        BaseType_t xRunPrivileged;

        // With the MPU enabled, the privilege request is encoded in uxPriority:
        // xRunPrivileged is pdTRUE for a privileged task, otherwise pdFALSE
        if( ( uxPriority & portPRIVILEGE_BIT ) != 0U )
        {
            xRunPrivileged = pdTRUE;
        }
        else
        {
            xRunPrivileged = pdFALSE;
        }

        uxPriority &= ~portPRIVILEGE_BIT; // Clear the privilege flag (top bit) to get the real priority
    #endif /* portUSING_MPU_WRAPPERS == 1 */

    /* Avoid dependency on memset() if it is not required. */
    #if ( tskSET_NEW_STACKS_TO_KNOWN_VALUE == 1 )
    {
        /* Fill the stack with a known value to assist debugging. */
        // Every byte becomes tskSTACK_FILL_BYTE, so stack high-water-mark checks can tell how much was used
        ( void ) memset( pxNewTCB->pxStack, ( int ) tskSTACK_FILL_BYTE, ( size_t ) uxStackDepth * sizeof( StackType_t ) );
    }
    #endif /* tskSET_NEW_STACKS_TO_KNOWN_VALUE */

    /* Calculate the top of stack address.  This depends on whether the stack
     * grows from high memory to low (as per the 80x86) or vice versa.
     * portSTACK_GROWTH is used to make the result positive or negative as required
     * by the port. */
    #if ( portSTACK_GROWTH < 0 )
    {
        // Stack grows down: the top starts at the last (highest-address) element
        pxTopOfStack = &( pxNewTCB->pxStack[ uxStackDepth - ( configSTACK_DEPTH_TYPE ) 1 ] );

        // Round down to the port's alignment. portBYTE_ALIGNMENT is 8 on the GCC ARM_CM3/ARM_CM4F ports,
        // so e.g. 0x2000000C becomes 0x20000008
        pxTopOfStack = ( StackType_t * ) ( ( ( portPOINTER_SIZE_TYPE ) pxTopOfStack ) & ( ~( ( portPOINTER_SIZE_TYPE ) portBYTE_ALIGNMENT_MASK ) ) );

        /* Check the alignment of the calculated top of stack is correct. */
        configASSERT( ( ( ( portPOINTER_SIZE_TYPE ) pxTopOfStack & ( portPOINTER_SIZE_TYPE ) portBYTE_ALIGNMENT_MASK ) == 0U ) );

        #if ( configRECORD_STACK_HIGH_ADDRESS == 1 )
        {
            /* Also record the stack's high address, which may assist
             * debugging. */
            pxNewTCB->pxEndOfStack = pxTopOfStack;
        }
        #endif /* configRECORD_STACK_HIGH_ADDRESS */
    }
    #else /* portSTACK_GROWTH */
    {
        // Stack grows up: the top starts at the first (lowest-address) element, rounded up to the alignment
        pxTopOfStack = pxNewTCB->pxStack;
        pxTopOfStack = ( StackType_t * ) ( ( ( ( portPOINTER_SIZE_TYPE ) pxTopOfStack ) + portBYTE_ALIGNMENT_MASK ) & ( ~( ( portPOINTER_SIZE_TYPE ) portBYTE_ALIGNMENT_MASK ) ) );

        /* Check the alignment of the calculated top of stack is correct. */
        configASSERT( ( ( ( portPOINTER_SIZE_TYPE ) pxTopOfStack & ( portPOINTER_SIZE_TYPE ) portBYTE_ALIGNMENT_MASK ) == 0U ) );

        /* The other extreme of the stack space is required if stack checking is
         * performed. */
        pxNewTCB->pxEndOfStack = pxNewTCB->pxStack + ( uxStackDepth - ( configSTACK_DEPTH_TYPE ) 1 ); // Highest address: the limit the stack must not grow past
    }
    #endif /* portSTACK_GROWTH */

    /* Store the task name in the TCB. */
    if( pcName != NULL ) // Was a name supplied?
    {
        // Copy the name into the TCB, since the caller's string may not outlive the task
        for( x = ( UBaseType_t ) 0; x < ( UBaseType_t ) configMAX_TASK_NAME_LEN; x++ )
        {
            pxNewTCB->pcTaskName[ x ] = pcName[ x ];

            /* Don't copy all configMAX_TASK_NAME_LEN if the string is shorter than
             * configMAX_TASK_NAME_LEN characters just in case the memory after the
             * string is not accessible (extremely unlikely). */
            if( pcName[ x ] == ( char ) 0x00 )
            {
                break;
            }
            else
            {
                mtCOVERAGE_TEST_MARKER();
            }
        }

        /* Ensure the name string is terminated in the case that the string length
         * was greater or equal to configMAX_TASK_NAME_LEN. */
        pxNewTCB->pcTaskName[ configMAX_TASK_NAME_LEN - 1U ] = '\0';
    }
    else
    {
        mtCOVERAGE_TEST_MARKER();
    }

    /* This is used as an array index so must ensure it's not too large. */
    configASSERT( uxPriority < configMAX_PRIORITIES ); // Assert if the priority is out of range

    if( uxPriority >= ( UBaseType_t ) configMAX_PRIORITIES ) // Only reachable when configASSERT is disabled
    {
        uxPriority = ( UBaseType_t ) configMAX_PRIORITIES - ( UBaseType_t ) 1U; // Clamp to the highest valid priority
    }
    else
    {
        mtCOVERAGE_TEST_MARKER();
    }

    pxNewTCB->uxPriority = uxPriority; // Store the priority in the TCB
    #if ( configUSE_MUTEXES == 1 )
    {
        pxNewTCB->uxBasePriority = uxPriority; // Keep the base priority so it can be restored after mutex priority inheritance
    }
    #endif /* configUSE_MUTEXES */

    vListInitialiseItem( &( pxNewTCB->xStateListItem ) ); // Initialise the state list item
    vListInitialiseItem( &( pxNewTCB->xEventListItem ) ); // Initialise the event list item

    /* Set the pxNewTCB as a link back from the ListItem_t.  This is so we can get
     * back to the containing TCB from a generic item in a list. */
    listSET_LIST_ITEM_OWNER( &( pxNewTCB->xStateListItem ), pxNewTCB ); // The item's owner is the new TCB

    /* Event lists are always in priority order. */
    // Store configMAX_PRIORITIES - priority: the higher the priority, the smaller the value,
    // so the highest-priority waiting task sits at the head of an event list
    listSET_LIST_ITEM_VALUE( &( pxNewTCB->xEventListItem ), ( TickType_t ) configMAX_PRIORITIES - ( TickType_t ) uxPriority );
    listSET_LIST_ITEM_OWNER( &( pxNewTCB->xEventListItem ), pxNewTCB ); // The item's owner is the new TCB

    #if ( portUSING_MPU_WRAPPERS == 1 )
    {
        vPortStoreTaskMPUSettings( &( pxNewTCB->xMPUSettings ), xRegions, pxNewTCB->pxStack, uxStackDepth ); // Record the memory regions the task may access
    }
    #else
    {
        /* Avoid compiler warning about unreferenced parameter. */
        ( void ) xRegions;
    }
    #endif

    #if ( configUSE_C_RUNTIME_TLS_SUPPORT == 1 )
    {
        /* Allocate and initialize memory for the task's TLS Block. */
        configINIT_TLS_BLOCK( pxNewTCB->xTLSBlock, pxTopOfStack ); // Set up the C runtime TLS block
    }
    #endif

    /* Initialize the TCB stack to look as if the task was already running,
     * but had been interrupted by the scheduler.  The return address is set
     * to the start of the task function. Once the stack has been initialised
     * the top of stack variable is updated. */
    #if ( portUSING_MPU_WRAPPERS == 1 )
    {
        /* If the port has capability to detect stack overflow,
         * pass the stack end address to the stack initialization
         * function as well. */
        #if ( portHAS_STACK_OVERFLOW_CHECKING == 1 )
        {
            #if ( portSTACK_GROWTH < 0 )
            {
                pxNewTCB->pxTopOfStack = pxPortInitialiseStack( pxTopOfStack, pxNewTCB->pxStack, pxTaskCode, pvParameters, xRunPrivileged, &( pxNewTCB->xMPUSettings ) );
            }
            #else /* portSTACK_GROWTH */
            {
                pxNewTCB->pxTopOfStack = pxPortInitialiseStack( pxTopOfStack, pxNewTCB->pxEndOfStack, pxTaskCode, pvParameters, xRunPrivileged, &( pxNewTCB->xMPUSettings ) );
            }
            #endif /* portSTACK_GROWTH */
        }
        #else /* portHAS_STACK_OVERFLOW_CHECKING */
        {
            pxNewTCB->pxTopOfStack = pxPortInitialiseStack( pxTopOfStack, pxTaskCode, pvParameters, xRunPrivileged, &( pxNewTCB->xMPUSettings ) );
        }
        #endif /* portHAS_STACK_OVERFLOW_CHECKING */
    }
    #else /* portUSING_MPU_WRAPPERS */
    {
        /* If the port has capability to detect stack overflow,
         * pass the stack end address to the stack initialization
         * function as well. */
        #if ( portHAS_STACK_OVERFLOW_CHECKING == 1 )
        {
            #if ( portSTACK_GROWTH < 0 )
            {
                pxNewTCB->pxTopOfStack = pxPortInitialiseStack( pxTopOfStack, pxNewTCB->pxStack, pxTaskCode, pvParameters );
            }
            #else /* portSTACK_GROWTH */
            {
                pxNewTCB->pxTopOfStack = pxPortInitialiseStack( pxTopOfStack, pxNewTCB->pxEndOfStack, pxTaskCode, pvParameters );
            }
            #endif /* portSTACK_GROWTH */
        }
        #else /* portHAS_STACK_OVERFLOW_CHECKING */
        {
            pxNewTCB->pxTopOfStack = pxPortInitialiseStack( pxTopOfStack, pxTaskCode, pvParameters ); // Build the initial stack frame (the ARM_CM4F case analysed below)
        }
        #endif /* portHAS_STACK_OVERFLOW_CHECKING */
    }
    #endif /* portUSING_MPU_WRAPPERS */

    /* Initialize task state and task attributes. */
    #if ( configNUMBER_OF_CORES > 1 )
    {
        pxNewTCB->xTaskRunState = taskTASK_NOT_RUNNING; // Not running on any core yet

        /* Is this an idle task? */
        // If the task function is one of the idle task functions, set the idle attribute
        if( ( ( TaskFunction_t ) pxTaskCode == ( TaskFunction_t ) prvIdleTask ) || ( ( TaskFunction_t ) pxTaskCode == ( TaskFunction_t ) prvPassiveIdleTask ) )
        {
            pxNewTCB->uxTaskAttributes |= taskATTRIBUTE_IS_IDLE;
        }
    }
    #endif /* #if ( configNUMBER_OF_CORES > 1 ) */

    if( pxCreatedTask != NULL )
    {
        /* Pass the handle out in an anonymous way.  The handle can be used to
         * change the created task's priority, delete the created task, etc.*/
        *pxCreatedTask = ( TaskHandle_t ) pxNewTCB; // The task handle is simply the TCB pointer
    }
    else
    {
        mtCOVERAGE_TEST_MARKER();
    }
}
```
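You can try the two rounding expressions used for the top of stack on plain integers. This sketch rests on a few stated assumptions:

- The alignment is 8 bytes (a mask of 0x7), as assumed for the GCC ARM_CM3/ARM_CM4F ports.
- Stack words are 4 bytes.
- `uintptr_t` stands in for `portPOINTER_SIZE_TYPE`.
- `align_top_down()`, `align_top_up()` and `last_element_address()` are hypothetical helper names.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: portBYTE_ALIGNMENT == 8, as in the GCC ARM_CM3/ARM_CM4F ports. */
#define BYTE_ALIGNMENT_MASK    ( ( uintptr_t ) 0x0007 )

/* Stack grows down: round the top address down to the alignment. */
static uintptr_t align_top_down( uintptr_t uxAddress )
{
    return uxAddress & ~BYTE_ALIGNMENT_MASK;
}

/* Stack grows up: round the start address up to the alignment. */
static uintptr_t align_top_up( uintptr_t uxAddress )
{
    return ( uxAddress + BYTE_ALIGNMENT_MASK ) & ~BYTE_ALIGNMENT_MASK;
}

/* Address of pxStack[ uxStackDepth - 1 ] for 4-byte stack words. */
static uintptr_t last_element_address( uintptr_t uxStackBase, uint32_t ulStackDepth )
{
    return uxStackBase + ( uintptr_t ) ( ulStackDepth - 1U ) * 4U;
}
```

As a worked example, take pxStack at 0x20000000 and uxStackDepth of 128 words. The last element is then at 0x200001FC, which rounds down to 0x200001F8, so the highest word is left unused.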

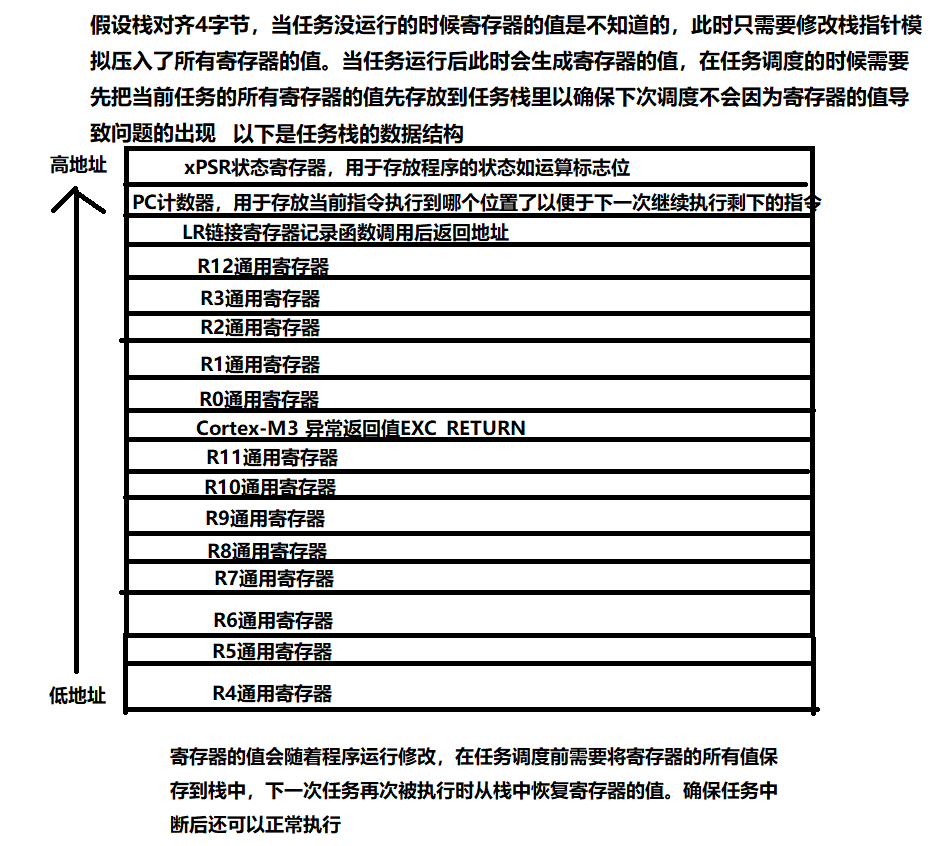
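The priority handling at the end of the function comes down to two small formulas. First, an out-of-range priority is clamped, which only matters when configASSERT() is disabled. Second, the event list item value is stored as configMAX_PRIORITIES minus the priority. Event lists are kept in ascending order of item value, so the highest-priority waiting task ends up first.

The sketch assumes configMAX_PRIORITIES is 5. `clamp_priority()` and `event_item_value()` are hypothetical names.

```c
#include <assert.h>
#include <stdint.h>

typedef uint32_t TickType_t;
typedef uint32_t UBaseType_t;

#define configMAX_PRIORITIES    5U   /* assumed value for this example */

/* What prvInitialiseNewTask() does when configASSERT() is disabled and an
 * out-of-range priority slips through: clamp to the highest valid priority. */
static UBaseType_t clamp_priority( UBaseType_t uxPriority )
{
    return ( uxPriority >= configMAX_PRIORITIES ) ? ( configMAX_PRIORITIES - 1U ) : uxPriority;
}

/* Value stored in xEventListItem. Event lists are sorted by ascending item
 * value, so the highest-priority waiter gets the smallest value and sits first. */
static TickType_t event_item_value( UBaseType_t uxPriority )
{
    return ( TickType_t ) configMAX_PRIORITIES - ( TickType_t ) uxPriority;
}
```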

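pxPortInitialiseStack: initialising the stack

- This function is central to starting a task. It builds an initial stack frame that makes the stack look as if the task had already been running and had then been interrupted by the scheduler. The first switch to the task can then restore it like any other context.

- The analysis below uses the GCC ARM_CM4F port, in the variant without MPU and without port-level stack-overflow checking. Other architectures have their own version, but they follow the same idea.

- The function writes initial values for xPSR, PC, LR and R0 (the task parameter), plus the EXC_RETURN value. It also reserves space for the remaining registers (R1-R3, R12 and R4-R11). When the scheduler switches to the task for the first time, it restores the registers from this frame.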


```c
/*
 * See header file for description.
 */
StackType_t * pxPortInitialiseStack( StackType_t * pxTopOfStack,
                                     TaskFunction_t pxCode,
                                     void * pvParameters )
{
    /* Simulate the stack frame as it would be created by a context switch
     * interrupt. */

    /* Offset added to account for the way the MCU uses the stack on entry/exit
     * of interrupts, and to ensure alignment. */
    pxTopOfStack--; // Step down one word, like "sp--"; the word at the incoming top stays unused

    *pxTopOfStack = portINITIAL_XPSR;                                    /* xPSR: only the Thumb bit set */
    pxTopOfStack--;
    *pxTopOfStack = ( ( StackType_t ) pxCode ) & portSTART_ADDRESS_MASK; /* PC: task entry point, bit 0 cleared */
    pxTopOfStack--;
    *pxTopOfStack = ( StackType_t ) portTASK_RETURN_ADDRESS;             /* LR: reached if the task function returns (prvTaskExitError by default) */

    /* Save code space by skipping register initialisation. */
    pxTopOfStack -= 5;                            /* R12, R3, R2 and R1 (reserved, not written). */
    *pxTopOfStack = ( StackType_t ) pvParameters; /* R0: the task parameter */

    /* A save method is being used that requires each task to maintain its
     * own exec return value. */
    pxTopOfStack--;
    *pxTopOfStack = portINITIAL_EXC_RETURN; /* EXC_RETURN: return to thread mode on the PSP, no FP frame */

    pxTopOfStack -= 8; /* R11, R10, R9, R8, R7, R6, R5 and R4 (saved/restored in software). */

    return pxTopOfStack; // The caller stores this in pxNewTCB->pxTopOfStack
}
```
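You can check the resulting layout on a host with a copy of the same pointer arithmetic. This sketch is illustrative only:

- `build_initial_frame()` is a hypothetical name.
- The three constants are assumed to match the ARM_CM4F port.
- The code and return addresses are plain integers, because host function pointers are not 32-bit Thumb addresses.

The sketch shows that the function fills 17 words below the incoming top of stack and returns a pointer to the R4 slot. On the first switch, the port's SVC handler pops R4-R11 and EXC_RETURN in software, and the hardware then unstacks R0-R3, R12, LR, PC and xPSR.

```c
#include <assert.h>
#include <stdint.h>

typedef uint32_t StackType_t;

/* Values assumed to match the GCC ARM_CM4F port. */
#define portINITIAL_XPSR          ( ( StackType_t ) 0x01000000UL ) /* Thumb state bit set */
#define portSTART_ADDRESS_MASK    ( ( StackType_t ) 0xfffffffeUL ) /* clear bit 0 of the PC */
#define portINITIAL_EXC_RETURN    ( ( StackType_t ) 0xfffffffdUL ) /* thread mode, PSP, no FP frame */

/* Host-side copy of the frame-building steps (hypothetical helper). */
static StackType_t * build_initial_frame( StackType_t * pxTopOfStack,
                                          StackType_t uxCodeAddress,
                                          StackType_t uxReturnAddress,
                                          StackType_t uxParameter )
{
    pxTopOfStack--;                                         /* leave the top word unused */
    *pxTopOfStack = portINITIAL_XPSR;                       /* xPSR */
    pxTopOfStack--;
    *pxTopOfStack = uxCodeAddress & portSTART_ADDRESS_MASK; /* PC */
    pxTopOfStack--;
    *pxTopOfStack = uxReturnAddress;                        /* LR */
    pxTopOfStack -= 5;                                      /* skip R12, R3, R2, R1 */
    *pxTopOfStack = uxParameter;                            /* R0 */
    pxTopOfStack--;
    *pxTopOfStack = portINITIAL_EXC_RETURN;                 /* EXC_RETURN */
    pxTopOfStack -= 8;                                      /* R11..R4 */
    return pxTopOfStack;                                    /* points at the R4 slot */
}
```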

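prvAddNewTaskToReadyList: adding the task to the ready list

- There are separate single-core and multi-core versions of this function; the single-core version is shown here.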
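In the single-core version, pxCurrentTCB does not mean "running" before vTaskStartScheduler() is called. It simply tracks the highest-priority task created so far. Because the comparison is `<=`, a later task of equal priority replaces an earlier one. The sketch below models only that selection rule. `pick_current_before_start()` is a hypothetical helper, and -1 stands for pxCurrentTCB being NULL.

```c
#include <assert.h>
#include <stddef.h>

/* Index of the task that ends up in pxCurrentTCB when tasks with these
 * priorities are created in order before the scheduler starts; -1 models
 * pxCurrentTCB == NULL. Hypothetical helper for illustration. */
static int pick_current_before_start( const unsigned * puxPriorities, size_t xCount )
{
    int iCurrent = -1;

    for( size_t i = 0; i < xCount; i++ )
    {
        /* First task, or "pxCurrentTCB->uxPriority <= pxNewTCB->uxPriority". */
        if( ( iCurrent < 0 ) || ( puxPriorities[ iCurrent ] <= puxPriorities[ i ] ) )
        {
            iCurrent = ( int ) i;
        }
    }

    return iCurrent;
}
```

With priorities 1, 3, 3, 2 created in that order, the second priority-3 task (index 2) is the one the scheduler starts with.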


149static void prvAddNewTaskToReadyList( TCB_t * pxNewTCB )

{

/* Ensure interrupts don't access the task lists while the lists are being

* updated. */

taskENTER_CRITICAL();

{

uxCurrentNumberOfTasks = ( UBaseType_t ) ( uxCurrentNumberOfTasks + 1U );

if( pxCurrentTCB == NULL ) // 当前TCB是否为空,为空则表示当前没有任何任务执行,目前的任务是第一个任务

{

/* There are no other tasks, or all the other tasks are in

* the suspended state - make this the current task. */

pxCurrentTCB = pxNewTCB; // 设置新建的任务的TCB为当前TCB

if( uxCurrentNumberOfTasks == ( UBaseType_t ) 1 )

{

/* This is the first task to be created so do the preliminary

* initialisation required. We will not recover if this call

* fails, but we will report the failure. */

prvInitialiseTaskLists(); // 若没有任务在列表里,则初始化任务列表

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

/* If the scheduler is not already running, make this task the

* current task if it is the highest priority task to be created

* so far. */

if( xSchedulerRunning == pdFALSE )

{

if( pxCurrentTCB->uxPriority <= pxNewTCB->uxPriority ) // 当前TCB存在表示当前有任务正在运行,若当前任务的优先级小于新任务则直接抢占将新任务设置成当前的任务

{

pxCurrentTCB = pxNewTCB;

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

else

{

mtCOVERAGE_TEST_MARKER();

}

}

uxTaskNumber++; // 任务数递增

{

/* Add a counter into the TCB for tracing only. */

pxNewTCB->uxTCBNumber = uxTaskNumber;

}

traceTASK_CREATE( pxNewTCB );

prvAddTaskToReadyList( pxNewTCB ); // 插入任务到对应优先级的链表中

portSETUP_TCB( pxNewTCB );

}

taskEXIT_CRITICAL();

if( xSchedulerRunning != pdFALSE )

{

/* If the created task is of a higher priority than the current task

* then it should run now. */

taskYIELD_ANY_CORE_IF_USING_PREEMPTION( pxNewTCB ); // 如果当前任务优先级小于新任务优先级则立刻触发PendSV中断进行任务调度,让其立刻执行新任务

}

else

{

mtCOVERAGE_TEST_MARKER();

}

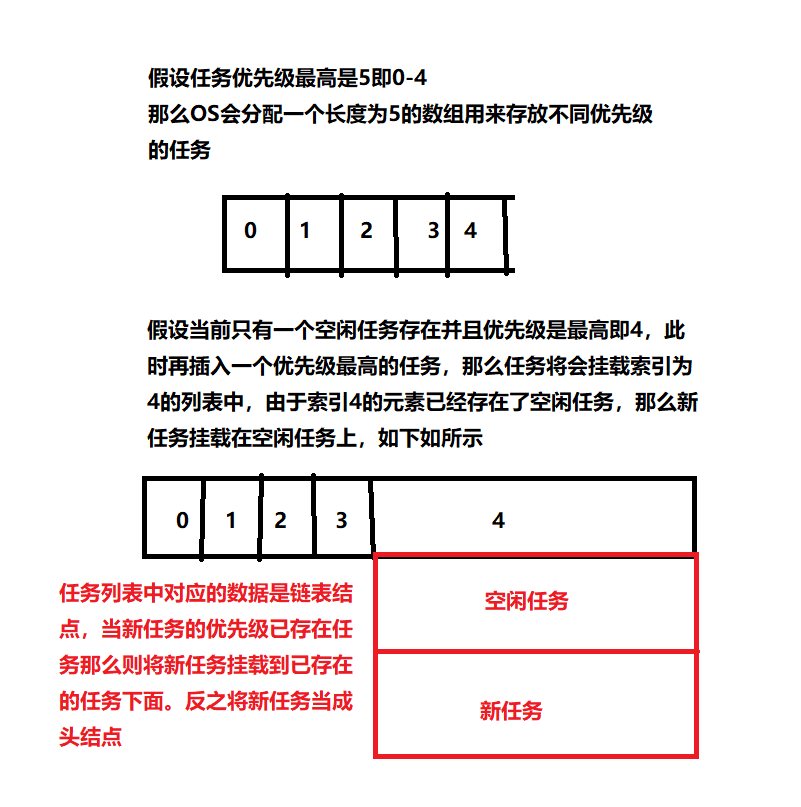

}prvAddTaskToReadyList插入任务到链表中 - 更新当前的最高优先级

- 插入TCB到对应优先级索引的链表中


```c
#define prvAddTaskToReadyList( pxTCB )                                                                     \
    do {                                                                                                   \
        traceMOVED_TASK_TO_READY_STATE( pxTCB );                                                           \
        taskRECORD_READY_PRIORITY( ( pxTCB )->uxPriority );  /* update the highest ready priority */       \
        listINSERT_END( &( pxReadyTasksLists[ ( pxTCB )->uxPriority ] ), &( ( pxTCB )->xStateListItem ) ); \
        tracePOST_MOVED_TASK_TO_READY_STATE( pxTCB );                                                      \
    } while( 0 )
```
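taskRECORD_READY_PRIORITY has two possible implementations, and the sketch below models both. All names are hypothetical. In particular, `uxReadyBitmap` is a separate variable here, whereas the real port reuses uxTopReadyPriority as the bitmap.

- The generic method (configUSE_PORT_OPTIMISED_TASK_SELECTION == 0) keeps the highest ready priority in one variable.
- The port-optimised method on Cortex-M keeps one bit per priority. The scheduler then finds the highest set bit with a count-leading-zeros instruction, which a portable loop replaces here.

```c
#include <assert.h>
#include <stdint.h>

/* Generic method: remember the highest priority that has been made ready. */
static uint32_t uxTopReadyPriority = 0;

static void record_ready_generic( uint32_t uxPriority )
{
    if( uxPriority > uxTopReadyPriority )
    {
        uxTopReadyPriority = uxPriority;
    }
}

/* Port-optimised method: one bit per priority level (at most 32 levels). */
static uint32_t uxReadyBitmap = 0;

static void record_ready_bitmap( uint32_t uxPriority )
{
    uxReadyBitmap |= ( 1UL << uxPriority );
}

/* Highest set bit; the real port uses CLZ: 31 - clz( bitmap ). */
static int highest_ready_bitmap( void )
{
    for( int i = 31; i >= 0; i-- )
    {
        if( ( uxReadyBitmap & ( 1UL << i ) ) != 0U )
        {
            return i;
        }
    }

    return -1;
}
```

The bitmap turns "find the highest ready priority" into a single instruction, independent of configMAX_PRIORITIES. In exchange, the number of priorities is limited to the word width.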

Task scheduling

vTaskDelay: delaying a task

```c
void vTaskDelay( const TickType_t xTicksToDelay )
```

prvAddCurrentTaskToDelayedList: adding the current task to a delayed list

```c
static void prvAddCurrentTaskToDelayedList( TickType_t xTicksToWait,
```
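Only the start of the prvAddCurrentTaskToDelayedList signature is shown here. In broad strokes, the function does three things:

- It computes an absolute wake time.
- It stores that wake time in the task's xStateListItem value.
- It inserts the task into either the delayed list or the overflow delayed list, depending on whether the addition wrapped past the maximum tick value.

The sketch below models only that last decision. `choose_delay_list()` and `DelayList_t` are hypothetical, and ticks are assumed to be 32 bits. It also leaves out the portMAX_DELAY case (the task goes to the suspended list) and the update of xNextTaskUnblockTime.

```c
#include <assert.h>
#include <stdint.h>

typedef uint32_t TickType_t;   /* assumption: 32-bit ticks */

typedef enum
{
    DELAYED_LIST,
    OVERFLOW_DELAYED_LIST
} DelayList_t;

/* Compute the wake time (which may wrap around) and pick the list it belongs in. */
static DelayList_t choose_delay_list( TickType_t xConstTickCount,
                                      TickType_t xTicksToWait,
                                      TickType_t * pxTimeToWake )
{
    *pxTimeToWake = xConstTickCount + xTicksToWait;

    /* A wake time "smaller" than now means the tick counter must overflow first. */
    return ( *pxTimeToWake < xConstTickCount ) ? OVERFLOW_DELAYED_LIST : DELAYED_LIST;
}
```

Keeping wrapped wake times in a separate list lets each list stay sorted by plain unsigned comparison. The two lists are swapped when the tick count wraps to zero.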

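xPortSysTickHandler: the OS tick

- This is the tick interrupt handler. When porting the OS it must be hooked to the SysTick interrupt (or to whatever timer drives the tick) so that it runs periodically at configTICK_RATE_HZ. The tick gives the OS its time base for delays, timeouts and time slicing.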


```c
void xPortSysTickHandler( void )
{
    /* The SysTick runs at the lowest interrupt priority, so when this interrupt
     * executes all interrupts must be unmasked.  There is therefore no need to
     * save and then restore the interrupt mask value as its value is already
     * known. */
    portDISABLE_INTERRUPTS(); // Raise BASEPRI: masks interrupts at or below configMAX_SYSCALL_INTERRUPT_PRIORITY (higher-priority interrupts stay enabled)
    traceISR_ENTER();
    {
        /* Increment the RTOS tick. */
        if( xTaskIncrementTick() != pdFALSE ) // Advance the tick; pdTRUE means a context switch is required
        {
            traceISR_EXIT_TO_SCHEDULER();

            /* A context switch is required.  Context switching is performed in
             * the PendSV interrupt.  Pend the PendSV interrupt. */
            portNVIC_INT_CTRL_REG = portNVIC_PENDSVSET_BIT;
        }
        else
        {
            traceISR_EXIT();
        }
    }
    portENABLE_INTERRUPTS(); // Set BASEPRI back to 0 so all interrupts are accepted again
}
```
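The handler never switches context itself. It only pends PendSV, and PendSV runs at the lowest priority, so the switch happens once no other interrupt is active. The sketch below reduces the handler to that decision. It assumes the ARMv7-M layout, where ICSR is at 0xE000ED04 and PENDSVSET is bit 28. A plain variable replaces the register, and `fake_systick_handler()` is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* ICSR (0xE000ED04 on ARMv7-M) is modelled by a plain variable here. */
#define portNVIC_PENDSVSET_BIT    ( 1UL << 28UL )
static volatile uint32_t ulFakeICSR = 0;

/* Body of the tick handler reduced to its decision: pend PendSV only when the
 * tick processing reported that a context switch is needed. */
static void fake_systick_handler( int xSwitchRequired )
{
    if( xSwitchRequired != 0 )
    {
        /* Plain assignment, not |=: on the real register, writing 0 to the
         * other bits has no effect. */
        ulFakeICSR = portNVIC_PENDSVSET_BIT;
    }
}
```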

xTaskIncrementTick: the core of the tick

```c
BaseType_t xTaskIncrementTick( void )
```
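Only the signature of xTaskIncrementTick is shown here. When the scheduler is not suspended, its core decision is roughly as follows:

- Advance the tick count.
- If the tick count has reached xNextTaskUnblockTime, move the due delayed tasks to their ready lists. With preemption, request a switch if one of them has a higher priority than the running task.
- With time slicing, also request a switch when other tasks share the running task's priority.

The sketch below models just that much. It uses one delayed task, 32-bit ticks and a hypothetical `TickModel_t`/`model_increment_tick()`, and it ignores tick-count wrap, pended ticks and pending yields.

```c
#include <assert.h>
#include <stdint.h>

typedef uint32_t TickType_t;   /* assumption: 32-bit ticks */

/* Hypothetical snapshot of the scheduler state that matters for one tick. */
typedef struct
{
    TickType_t xTickCount;
    TickType_t xNextTaskUnblockTime;   /* wake time of the earliest delayed task */
    unsigned uxWokenPriority;          /* priority of that delayed task */
    unsigned uxCurrentPriority;        /* priority of the running task */
    unsigned uxReadyAtCurrentPriority; /* length of the running task's ready list */
} TickModel_t;

/* Returns 1 when a context switch should be requested (PendSV pended). */
static int model_increment_tick( TickModel_t * pxModel )
{
    int xSwitchRequired = 0;
    const TickType_t xConstTickCount = ++pxModel->xTickCount;

    if( xConstTickCount >= pxModel->xNextTaskUnblockTime )
    {
        /* The delayed task becomes ready; nothing else is delayed in this model. */
        pxModel->xNextTaskUnblockTime = UINT32_MAX;

        if( pxModel->uxWokenPriority > pxModel->uxCurrentPriority )
        {
            xSwitchRequired = 1;                      /* preemption */
        }
        else if( pxModel->uxWokenPriority == pxModel->uxCurrentPriority )
        {
            pxModel->uxReadyAtCurrentPriority++;      /* joins the running task's list */
        }
    }

    /* Time slicing: share the CPU with other ready tasks of equal priority. */
    if( pxModel->uxReadyAtCurrentPriority > 1U )
    {
        xSwitchRequired = 1;
    }

    return xSwitchRequired;
}
```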

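xPortStartScheduler: starting the scheduler

- When porting the OS, the OS exception handlers (such as vPortSVCHandler and xPortPendSVHandler) must be installed, in one of two ways:

- Direct routing: point the vector table entries directly at the OS handlers. With configCHECK_HANDLER_INSTALLATION set to 1, the function asserts that the vector table entries really point at the OS handlers.

- Indirect routing: keep the device's own handlers and call the OS handlers from them.

- When configASSERT() is defined, the function works out how many interrupt priority bits the chip actually implements. This is implementation-defined; many Cortex-M4 devices, such as the STM32F4 family, implement 4 bits (priorities 0-15).

- From that count it computes the priority grouping (PRIGROUP) value that later assertions check against.

- It configures the SHPR registers (system handler priorities) so that SVCall has the highest priority and SysTick and PendSV have the lowest.

- It enables the VFP (floating-point unit) and lazy stacking of the FPU registers.

- Finally, it starts the first task.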


321BaseType_t xPortStartScheduler( void )

{

/* This port can be used on all revisions of the Cortex-M7 core other than

* the r0p1 parts. r0p1 parts should use the port from the

* /source/portable/GCC/ARM_CM7/r0p1 directory. */

configASSERT( portCPUID != portCORTEX_M7_r0p1_ID );

configASSERT( portCPUID != portCORTEX_M7_r0p0_ID ); // 断言

/* An application can install FreeRTOS interrupt handlers in one of the

* following ways:

* 1. Direct Routing - Install the functions vPortSVCHandler and

* xPortPendSVHandler for SVCall and PendSV interrupts respectively.

* 2. Indirect Routing - Install separate handlers for SVCall and PendSV

* interrupts and route program control from those handlers to

* vPortSVCHandler and xPortPendSVHandler functions.

*

* Applications that use Indirect Routing must set

* configCHECK_HANDLER_INSTALLATION to 0 in their FreeRTOSConfig.h. Direct

* routing, which is validated here when configCHECK_HANDLER_INSTALLATION

* is 1, should be preferred when possible. */

{

const portISR_t * const pxVectorTable = portSCB_VTOR_REG;

/* Validate that the application has correctly installed the FreeRTOS

* handlers for SVCall and PendSV interrupts. We do not check the

* installation of the SysTick handler because the application may

* choose to drive the RTOS tick using a timer other than the SysTick

* timer by overriding the weak function vPortSetupTimerInterrupt().

*

* Assertion failures here indicate incorrect installation of the

* FreeRTOS handlers. For help installing the FreeRTOS handlers, see

* https://www.freertos.org/Why-FreeRTOS/FAQs.

*

* Systems with a configurable address for the interrupt vector table

* can also encounter assertion failures or even system faults here if

* VTOR is not set correctly to point to the application's vector table. */

configASSERT( pxVectorTable[ portVECTOR_INDEX_SVC ] == vPortSVCHandler );

configASSERT( pxVectorTable[ portVECTOR_INDEX_PENDSV ] == xPortPendSVHandler ); // 若修改向量表,是否安装正确断言

}

{

volatile uint8_t ucOriginalPriority; // 原始优先级

volatile uint32_t ulImplementedPrioBits = 0;

volatile uint8_t * const pucFirstUserPriorityRegister = ( volatile uint8_t * const ) ( portNVIC_IP_REGISTERS_OFFSET_16 + portFIRST_USER_INTERRUPT_NUMBER );

volatile uint8_t ucMaxPriorityValue;

/* Determine the maximum priority from which ISR safe FreeRTOS API

* functions can be called. ISR safe functions are those that end in

* "FromISR". FreeRTOS maintains separate thread and ISR API functions to

* ensure interrupt entry is as fast and simple as possible.

*

* Save the interrupt priority value that is about to be clobbered. */

ucOriginalPriority = *pucFirstUserPriorityRegister; // 保留NVIC寄存器原始值

/* Determine the number of priority bits available. First write to all

* possible bits. */

*pucFirstUserPriorityRegister = portMAX_8_BIT_VALUE; // 写入0xFF到NVIC寄存器

/* Read the value back to see how many bits stuck. */

ucMaxPriorityValue = *pucFirstUserPriorityRegister; // 从寄存器再读出来,由于写的是0xFF,从Cortex-M4资料可知虽然写了0xFF但是由于处理器只使用了高四位,低四位读出来的是零并且屏蔽了写操作。那么此时读取出来的值是0xF0。根据这一特性得到处理器实际可以支持的中断位

/* Use the same mask on the maximum system call priority. */

ucMaxSysCallPriority = configMAX_SYSCALL_INTERRUPT_PRIORITY & ucMaxPriorityValue; // 确保用户设置的优先级不超过硬件支持的最大优先级

/* Check that the maximum system call priority is nonzero after

* accounting for the number of priority bits supported by the

* hardware. A priority of 0 is invalid because setting the BASEPRI

* register to 0 unmasks all interrupts, and interrupts with priority 0

* cannot be masked using BASEPRI.

* See https://www.FreeRTOS.org/RTOS-Cortex-M3-M4.html */

configASSERT( ucMaxSysCallPriority ); // 若为0则断言

/* Check that the bits not implemented in hardware are zero in

* configMAX_SYSCALL_INTERRUPT_PRIORITY. */

configASSERT( ( configMAX_SYSCALL_INTERRUPT_PRIORITY & ( ~ucMaxPriorityValue ) ) == 0U ); // 从Cortex-M4资料可知处理器只使用了高四位,若配置使用了低四位无效位则断言

/* Calculate the maximum acceptable priority group value for the number

* of bits read back. */

// 计算有效位数

while( ( ucMaxPriorityValue & portTOP_BIT_OF_BYTE ) == portTOP_BIT_OF_BYTE )

{

ulImplementedPrioBits++;

ucMaxPriorityValue <<= ( uint8_t ) 0x01;

}

// 若8位都有效

if( ulImplementedPrioBits == 8 )

{

/* When the hardware implements 8 priority bits, there is no way for

* the software to configure PRIGROUP to not have sub-priorities. As

* a result, the least significant bit is always used for sub-priority

* and there are 128 preemption priorities and 2 sub-priorities.

*

* This may cause some confusion in some cases - for example, if

* configMAX_SYSCALL_INTERRUPT_PRIORITY is set to 5, both 5 and 4

* priority interrupts will be masked in Critical Sections as those

* are at the same preemption priority. This may appear confusing as

* 4 is higher (numerically lower) priority than

* configMAX_SYSCALL_INTERRUPT_PRIORITY and therefore, should not

* have been masked. Instead, if we set configMAX_SYSCALL_INTERRUPT_PRIORITY

* to 4, this confusion does not happen and the behaviour remains the same.

*

* The following assert ensures that the sub-priority bit in the

* configMAX_SYSCALL_INTERRUPT_PRIORITY is clear to avoid the above mentioned

* confusion. */

configASSERT( ( configMAX_SYSCALL_INTERRUPT_PRIORITY & 0x1U ) == 0U );

ulMaxPRIGROUPValue = 0; // 子优先级有效位为0

}

else

{

ulMaxPRIGROUPValue = portMAX_PRIGROUP_BITS - ulImplementedPrioBits; // 子优先级的有效位为7-ulImplementedPrioBits,若ulImplementedPrioBits=4则有效位为7-4=3

}

/* Shift the priority group value back to its position within the AIRCR

* register. */

ulMaxPRIGROUPValue <<= portPRIGROUP_SHIFT; // 移动到AIRCR寄存器的第8位

ulMaxPRIGROUPValue &= portPRIORITY_GROUP_MASK; // AIRCR 优先级分组的掩码

/* Restore the clobbered interrupt priority register to its original

* value. */

*pucFirstUserPriorityRegister = ucOriginalPriority; // 还原原始优先级

}

/* Make PendSV and SysTick the lowest priority interrupts, and make SVCall

* the highest priority. */

// PendSV 和 SysTick Handler优先级为最低,SVCall优先级为最高

portNVIC_SHPR3_REG |= portNVIC_PENDSV_PRI;

portNVIC_SHPR3_REG |= portNVIC_SYSTICK_PRI;

portNVIC_SHPR2_REG = 0;

/* Start the timer that generates the tick ISR. Interrupts are disabled

* here already. */

vPortSetupTimerInterrupt(); // 初始化Systick滴答计时器

/* Initialise the critical nesting count ready for the first task. */

uxCriticalNesting = 0; // 中断嵌套层数

/* Ensure the VFP is enabled - it should be anyway. */

vPortEnableVFP(); // 开启向量浮点计算加速计算浮点数

/* Lazy save always. */

*( portFPCCR ) |= portASPEN_AND_LSPEN_BITS;

/* Start the first task. */

prvPortStartFirstTask(); // Start the first task; this call does not return

/* Should never get here as the tasks will now be executing! Call the task

* exit error function to prevent compiler warnings about a static function

* not being called in the case that the application writer overrides this

* functionality by defining configTASK_RETURN_ADDRESS. Call

* vTaskSwitchContext() so link time optimisation does not remove the

* symbol. */

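vTaskSwitchContext(); // Never reached; referenced only so that link-time optimisation keeps the symbol

prvTaskExitError(); // Never reached; referenced to avoid an "unused static function" warning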

/* Should not get here! */

return 0;

}

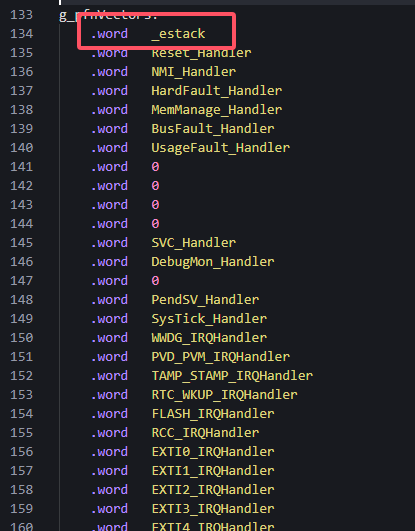

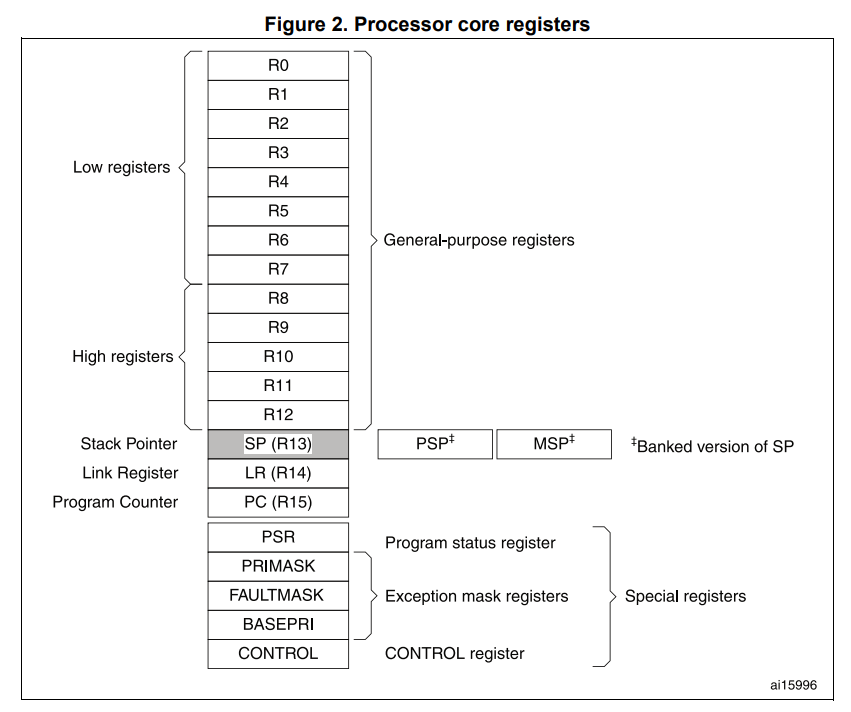
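You can check the PRIGROUP arithmetic above without hardware by replaying it in plain C. This is a sketch. It assumes the implemented priority bits are counted the way the FreeRTOS port does earlier in xPortStartScheduler: write 0xFF to a priority register and count the leading 1 bits of the read-back value. The helper names are hypothetical. Only the constants mirror portMAX_PRIGROUP_BITS, portPRIGROUP_SHIFT and portPRIORITY_GROUP_MASK.

```c
#include <stdint.h>
#include <assert.h>

/* Constants mirroring the port's macros (values as used by the ARM_CM4F port). */
#define MAX_PRIGROUP_BITS  7u                          /* portMAX_PRIGROUP_BITS */
#define PRIGROUP_SHIFT     8u                          /* portPRIGROUP_SHIFT */
#define PRIGROUP_MASK      (0x07u << PRIGROUP_SHIFT)   /* portPRIORITY_GROUP_MASK */

/* Unimplemented low bits read back as 0, so count the leading 1 bits. */
static uint32_t implemented_prio_bits(uint8_t read_back)
{
    uint32_t bits = 0u;
    while ((read_back & 0x80u) == 0x80u) {
        bits++;
        read_back = (uint8_t)(read_back << 1);
    }
    return bits;
}

/* PRIGROUP value that gives every implemented bit to preemption priority,
 * already shifted into its AIRCR position. */
static uint32_t max_prigroup(uint32_t implemented_bits)
{
    uint32_t value = (implemented_bits == 8u) ? 0u
                                              : MAX_PRIGROUP_BITS - implemented_bits;
    return (value << PRIGROUP_SHIFT) & PRIGROUP_MASK;
}

/* How many *implemented* bits end up as sub-priority for a given PRIGROUP. */
static uint32_t implemented_sub_bits(uint32_t implemented_bits, uint32_t prigroup)
{
    uint32_t sub_field = prigroup + 1u;              /* bits [prigroup:0] are sub-priority */
    uint32_t unimplemented = 8u - implemented_bits;  /* low bits that always read 0 */
    return (sub_field > unimplemented) ? (sub_field - unimplemented) : 0u;
}
```

Take a part with 4 implemented priority bits (read-back 0xF0). The sketch yields PRIGROUP = 3 (0x300 in AIRCR), and every implemented bit is a preemption bit. With 8 implemented bits, PRIGROUP = 0 still leaves bit 0 as a sub-priority bit, which is why the assert above requires that bit of configMAX_SYSCALL_INTERRUPT_PRIORITY to be clear.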

prvPortStartFirstTask: starting the first task

- 从VTOR寄存器获取到NVIC中断向量表的偏移地址

- 读取NVIC向量表中的第一个元素作为程序的栈,设置MSP的值为栈顶地址

- 开启特权模式并且使用MSP作为栈

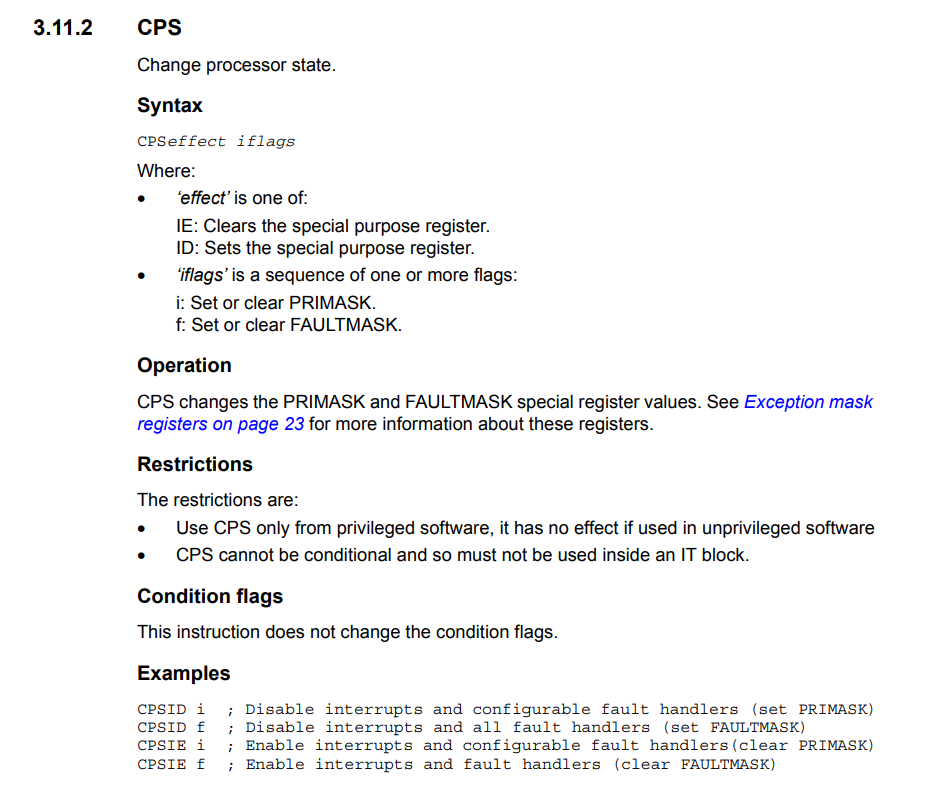

- 开启所有中断

- 触发系统调用,执行SVCHandler
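The three loads at the start of prvPortStartFirstTask go through two levels of indirection. VTOR holds the table address, and the table's first word holds the initial stack pointer. The sketch below models this on an ordinary array. The table contents and the helper name are made-up values for illustration.

```c
#include <stdint.h>
#include <assert.h>

/* Made-up vector table: entry 0 is the initial MSP, entry 1 the reset handler. */
static const uint32_t fake_vector_table[] = {
    0x20020000u,   /* initial stack pointer (e.g. _estack in many linker scripts) */
    0x08000199u,   /* reset handler address with the Thumb bit set */
};

/* Hypothetical model of:
 *   ldr r0, =0xE000ED08   -> r0 = address of VTOR (here: vtor_reg)
 *   ldr r0, [r0]          -> r0 = vector table base
 *   ldr r0, [r0]          -> r0 = vector[0], the value written to MSP */
static uint32_t initial_msp_from_vtor(const uint32_t *const *vtor_reg)
{
    const uint32_t *table = *vtor_reg;   /* read VTOR */
    return table[0];                     /* read the first vector entry */
}
```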

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52static void prvPortStartFirstTask( void )

{

/* Start the first task. This also clears the bit that indicates the FPU is

* in use in case the FPU was used before the scheduler was started - which

* would otherwise result in the unnecessary leaving of space in the SVC stack

* for lazy saving of FPU registers. */

__asm volatile (

" ldr r0, =0xE000ED08 \n" /* Use the NVIC offset register to locate the stack. */ // 将指向NVIC中断向量表的偏移地址的寄存器地址VTOR读取到r0

" ldr r0, [r0] \n" // 读取NVIC实际存放的地址到r0

" ldr r0, [r0] \n" // 读取NVIC向量表的第一个元素到r0,其第一个元素存放的是栈地址_estack

" msr msp, r0 \n" /* Set the msp back to the start of the stack. */ // 设置主栈指针(main statck point)

" mov r0, #0 \n" /* Clear the bit that indicates the FPU is in use, see comment above. */ // 将立即数0放到r0

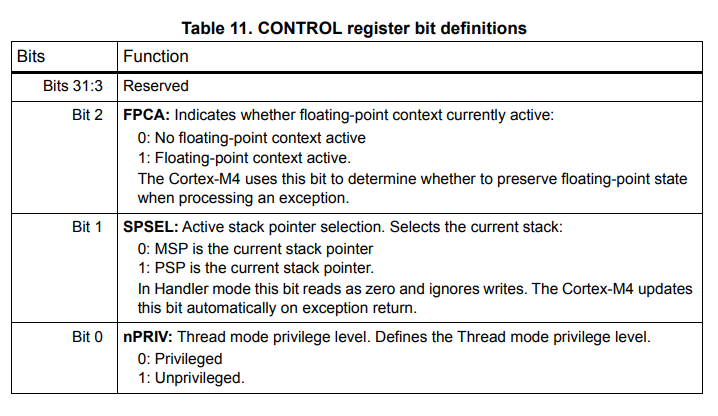

" msr control, r0 \n" /* 设置control寄存器,其存放了特权标志位和主栈和进程栈选择标志位,bit0:

0: 特权模式

1:非特权模式

bit1:

0: 使用MSP作为当前使用的栈

1: 使用PSP作为当前使用的栈

这里都是0即为特权模式使用MSP

*/

" cpsie i \n" /* Globally enable interrupts. */ // 启用全局中断

" cpsie f \n"

" dsb \n" // 同步数据

" isb \n" // 同步指令

" svc 0 \n" /* System call to start first task. */ // 系统调用触发SVC Handler

" nop \n"

" .ltorg \n"

);

}

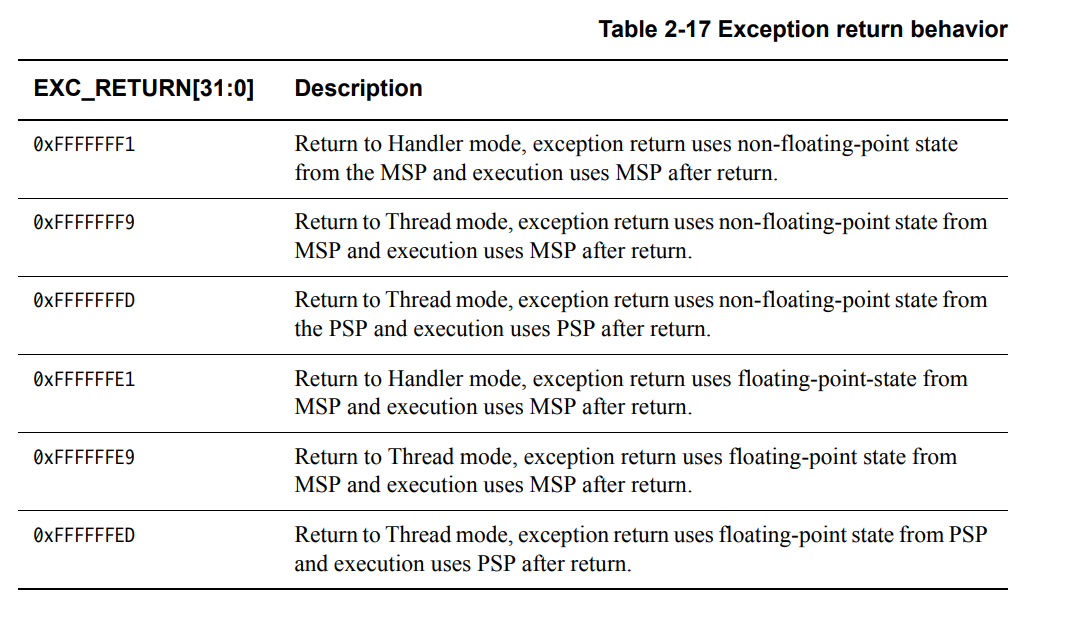
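The following small decoder makes the CONTROL bits written above concrete. It is a sketch that assumes the ARMv7-M layout (nPRIV at bit 0, SPSEL at bit 1, FPCA at bit 2). The type and function names are hypothetical.

```c
#include <stdint.h>
#include <stdbool.h>
#include <assert.h>

#define CONTROL_nPRIV  (1u << 0)  /* 0 = privileged thread mode, 1 = unprivileged */
#define CONTROL_SPSEL  (1u << 1)  /* 0 = thread mode uses MSP, 1 = uses PSP */
#define CONTROL_FPCA   (1u << 2)  /* 1 = a floating-point context is active */

typedef struct {
    bool privileged;
    bool uses_psp;
    bool fp_active;
} control_state;

/* Decode a CONTROL value into its three meaningful flags. */
static control_state decode_control(uint32_t control)
{
    control_state s;
    s.privileged = (control & CONTROL_nPRIV) == 0u;
    s.uses_psp   = (control & CONTROL_SPSEL) != 0u;
    s.fp_active  = (control & CONTROL_FPCA) != 0u;
    return s;
}
```

Writing 0, as prvPortStartFirstTask does, decodes to privileged, MSP and no active FP context. In handler mode the processor always uses MSP, whatever SPSEL says. Tasks switch to the PSP through the EXC_RETURN value instead.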

vPortSVCHandler: the system call that launches the first task

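- Executing svc 0 triggers this handler, which starts the first task. It restores the task's saved registers from the task stack and makes thread mode use the PSP

- bx r14 returns from the handler using the EXC_RETURN value in r14 (LR). On exception return the hardware pops R0-R3, R12, LR, PC and xPSR from the PSP, so execution continues at the address held in the stacked PC (Program Counter)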

```c
void vPortSVCHandler( void )
{
    __asm volatile (
        " ldr r3, =pxCurrentTCB    \n" /* Restore the context. */ // r3 = &pxCurrentTCB (address of the variable holding the current TCB pointer)
        " ldr r1, [r3]             \n" /* Get the pxCurrentTCB address. */ // r1 = pxCurrentTCB
        " ldr r0, [r1]             \n" /* The first item in pxCurrentTCB is the task top of stack. */ // r0 = pxCurrentTCB->pxTopOfStack
        " ldmia r0!, {r4-r11, r14} \n" /* Pop the registers that are not automatically saved on exception entry and the critical nesting count. */ // Restore r4-r11 and r14 (EXC_RETURN); on the first run these values were fabricated when the task stack was initialised
        " msr psp, r0              \n" /* Restore the task stack pointer. */ // PSP now points at the hardware-stacked frame
        " isb                      \n" // Instruction synchronization barrier
        " mov r0, #0               \n"
        " msr basepri, r0          \n" // BASEPRI = 0 turns masking off; a non-zero BASEPRI blocks every exception whose priority value is numerically >= BASEPRI
        " bx r14                   \n" // r14 holds EXC_RETURN 0xFFFFFFFD from the initial stack frame: return to thread mode using the PSP
        "                          \n"
        " .ltorg                   \n"
    );
}
```
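The SVC handler works because the new task's stack was prepared to look as if the task had already been interrupted. The sketch below models that layout on a plain array. It loosely follows pxPortInitialiseStack in the FreeRTOS GCC ARM_CM4F port, which this section does not show. The function names, the entry address and the parameter value are hypothetical.

```c
#include <stdint.h>
#include <assert.h>

#define INITIAL_XPSR        0x01000000u  /* Thumb bit set */
#define INITIAL_EXC_RETURN  0xFFFFFFFDu  /* thread mode, PSP, no FP frame */

/* Fill a downward-growing fake stack the way a new task is prepared. */
static uint32_t *init_task_stack(uint32_t *top, uint32_t entry, uint32_t arg,
                                 uint32_t exit_handler)
{
    *--top = INITIAL_XPSR;        /* xPSR */
    *--top = entry & ~1u;         /* PC: task entry with bit 0 cleared */
    *--top = exit_handler;        /* LR: used if the task function returns */
    top -= 4;                     /* R12, R3, R2, R1 (left uninitialised) */
    *--top = arg;                 /* R0: task parameter */
    *--top = INITIAL_EXC_RETURN;  /* later loaded into r14 by the SVC handler */
    top -= 8;                     /* R11..R4 */
    return top;                   /* value stored as pxTopOfStack */
}

typedef struct {
    uint32_t r4_r11[8];
    uint32_t r14;
    uint32_t *psp;
} restored_regs;

/* Model of "ldmia r0!, {r4-r11, r14}" followed by "msr psp, r0". */
static restored_regs svc_restore(uint32_t *top)
{
    restored_regs r;
    for (int i = 0; i < 8; i++) {
        r.r4_r11[i] = *top++;     /* lowest address holds r4 */
    }
    r.r14 = *top++;
    r.psp = top;                  /* hardware pops R0..xPSR from here on return */
    return r;
}
```

A fresh task therefore needs 17 words: 8 for r4-r11, 1 for EXC_RETURN and 8 for the hardware frame.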

vTaskSwitchContext: switching the task context

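- If the scheduler is suspended (uxSchedulerSuspended != 0), no switch is allowed. The function only records a pending yield in xYieldPendings, and the switch happens when the scheduler resumes

- Otherwise, clear the pending-yield flag. If run-time statistics are enabled, add the time the outgoing task has just run to its ulRunTimeCounter

- Check for stack overflow (if configured)

- Select the highest-priority ready task and make it the new pxCurrentTCB

- Run the task-switch hooks: trace macros for debugging and portTASK_SWITCH_HOOK for port-specific actions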

```c
#if ( configNUMBER_OF_CORES == 1 )
    void vTaskSwitchContext( void )
    {
        traceENTER_vTaskSwitchContext();

        if( uxSchedulerSuspended != ( UBaseType_t ) 0U )
        {
            /* The scheduler is currently suspended - do not allow a context
             * switch. */
            xYieldPendings[ 0 ] = pdTRUE; // A switch is pending; it is performed when the scheduler resumes
        }
        else
        {
            xYieldPendings[ 0 ] = pdFALSE; // Nothing pending; switch now
            traceTASK_SWITCHED_OUT();

            #if ( configGENERATE_RUN_TIME_STATS == 1 )
            {
                #ifdef portALT_GET_RUN_TIME_COUNTER_VALUE
                    portALT_GET_RUN_TIME_COUNTER_VALUE( ulTotalRunTime[ 0 ] );
                #else
                    ulTotalRunTime[ 0 ] = portGET_RUN_TIME_COUNTER_VALUE();
                #endif

                /* Add the amount of time the task has been running to the
                 * accumulated time so far. The time the task started running was
                 * stored in ulTaskSwitchedInTime. Note that there is no overflow
                 * protection here so count values are only valid until the timer
                 * overflows. The guard against negative values is to protect
                 * against suspect run time stat counter implementations - which
                 * are provided by the application, not the kernel. */
                if( ulTotalRunTime[ 0 ] > ulTaskSwitchedInTime[ 0 ] )
                {
                    pxCurrentTCB->ulRunTimeCounter += ( ulTotalRunTime[ 0 ] - ulTaskSwitchedInTime[ 0 ] );
                }
                else
                {
                    mtCOVERAGE_TEST_MARKER();
                }

                ulTaskSwitchedInTime[ 0 ] = ulTotalRunTime[ 0 ];
            }
            #endif /* configGENERATE_RUN_TIME_STATS */

            /* Check for stack overflow, if configured. */
            taskCHECK_FOR_STACK_OVERFLOW(); // Stack overflow check

            /* Before the currently running task is switched out, save its errno. */
            #if ( configUSE_POSIX_ERRNO == 1 )
            {
                pxCurrentTCB->iTaskErrno = FreeRTOS_errno;
            }
            #endif

            /* Select a new task to run using either the generic C or port
             * optimised asm code. */
            /* MISRA Ref 11.5.3 [Void pointer assignment] */
            /* More details at: https://github.com/FreeRTOS/FreeRTOS-Kernel/blob/main/MISRA.md#rule-115 */
            /* coverity[misra_c_2012_rule_11_5_violation] */
            taskSELECT_HIGHEST_PRIORITY_TASK(); // Make the highest-priority ready task pxCurrentTCB
            traceTASK_SWITCHED_IN();

            /* Macro to inject port specific behaviour immediately after
             * switching tasks, such as setting an end of stack watchpoint
             * or reconfiguring the MPU. */
            portTASK_SWITCH_HOOK( pxCurrentTCB ); // Port-specific hook run right after the switch

            /* After the new task is switched in, update the global errno. */
            #if ( configUSE_POSIX_ERRNO == 1 )
            {
                FreeRTOS_errno = pxCurrentTCB->iTaskErrno;
            }
            #endif

            #if ( configUSE_C_RUNTIME_TLS_SUPPORT == 1 )
            {
                /* Switch C-Runtime's TLS Block to point to the TLS
                 * Block specific to this task. */
                configSET_TLS_BLOCK( pxCurrentTCB->xTLSBlock );
            }
            #endif
        }

        traceRETURN_vTaskSwitchContext();
    }
#else /* if ( configNUMBER_OF_CORES == 1 ) */
    // Multi-core (SMP) variant: same flow with per-core state, protected by the task and ISR locks
    void vTaskSwitchContext( BaseType_t xCoreID )
    {
        traceENTER_vTaskSwitchContext();

        /* Acquire both locks:
         * - The ISR lock protects the ready list from simultaneous access by
         *   both other ISRs and tasks.
         * - We also take the task lock to pause here in case another core has
         *   suspended the scheduler. We don't want to simply set xYieldPending
         *   and move on if another core suspended the scheduler. We should only
         *   do that if the current core has suspended the scheduler. */
        portGET_TASK_LOCK(); /* Must always acquire the task lock first. */
        portGET_ISR_LOCK();
        {
            /* vTaskSwitchContext() must never be called from within a critical section.
             * This is not necessarily true for single core FreeRTOS, but it is for this
             * SMP port. */
            configASSERT( portGET_CRITICAL_NESTING_COUNT() == 0 );

            if( uxSchedulerSuspended != ( UBaseType_t ) 0U )
            {
                /* The scheduler is currently suspended - do not allow a context
                 * switch. */
                xYieldPendings[ xCoreID ] = pdTRUE;
            }
            else
            {
                xYieldPendings[ xCoreID ] = pdFALSE;
                traceTASK_SWITCHED_OUT();

                #if ( configGENERATE_RUN_TIME_STATS == 1 )
                {
                    #ifdef portALT_GET_RUN_TIME_COUNTER_VALUE
                        portALT_GET_RUN_TIME_COUNTER_VALUE( ulTotalRunTime[ xCoreID ] );
                    #else
                        ulTotalRunTime[ xCoreID ] = portGET_RUN_TIME_COUNTER_VALUE();
                    #endif

                    /* Same run-time accounting as the single-core version. */
                    if( ulTotalRunTime[ xCoreID ] > ulTaskSwitchedInTime[ xCoreID ] )
                    {
                        pxCurrentTCBs[ xCoreID ]->ulRunTimeCounter += ( ulTotalRunTime[ xCoreID ] - ulTaskSwitchedInTime[ xCoreID ] );
                    }
                    else
                    {
                        mtCOVERAGE_TEST_MARKER();
                    }

                    ulTaskSwitchedInTime[ xCoreID ] = ulTotalRunTime[ xCoreID ];
                }
                #endif /* configGENERATE_RUN_TIME_STATS */

                /* Check for stack overflow, if configured. */
                taskCHECK_FOR_STACK_OVERFLOW();

                /* Before the currently running task is switched out, save its errno. */
                #if ( configUSE_POSIX_ERRNO == 1 )
                {
                    pxCurrentTCBs[ xCoreID ]->iTaskErrno = FreeRTOS_errno;
                }
                #endif

                /* Select a new task to run. */
                taskSELECT_HIGHEST_PRIORITY_TASK( xCoreID );
                traceTASK_SWITCHED_IN();

                /* Macro to inject port specific behaviour immediately after
                 * switching tasks, such as setting an end of stack watchpoint
                 * or reconfiguring the MPU. */
                portTASK_SWITCH_HOOK( pxCurrentTCBs[ portGET_CORE_ID() ] );

                /* After the new task is switched in, update the global errno. */
                #if ( configUSE_POSIX_ERRNO == 1 )
                {
                    FreeRTOS_errno = pxCurrentTCBs[ xCoreID ]->iTaskErrno;
                }
                #endif

                #if ( configUSE_C_RUNTIME_TLS_SUPPORT == 1 )
                {
                    /* Switch C-Runtime's TLS Block to point to the TLS
                     * Block specific to this task. */
                    configSET_TLS_BLOCK( pxCurrentTCBs[ xCoreID ]->xTLSBlock );
                }
                #endif
            }
        }
        portRELEASE_ISR_LOCK();
        portRELEASE_TASK_LOCK();

        traceRETURN_vTaskSwitchContext();
    }
#endif /* if ( configNUMBER_OF_CORES == 1 ) */
```
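Without tracing, MISRA annotations and optional features, the single-core path comes down to a few state updates. The model below is a hypothetical sketch of that flow. `now` stands in for portGET_RUN_TIME_COUNTER_VALUE(), and `next` stands in for the task that taskSELECT_HIGHEST_PRIORITY_TASK() would pick. The struct fields only mirror the kernel variables named in the comments.

```c
#include <stdint.h>
#include <stdbool.h>
#include <assert.h>

typedef struct {
    uint32_t run_time;             /* mirrors ulRunTimeCounter */
} task_t;

typedef struct {
    unsigned scheduler_suspended;  /* mirrors uxSchedulerSuspended */
    bool yield_pending;            /* mirrors xYieldPendings[ 0 ] */
    uint32_t switched_in_time;     /* mirrors ulTaskSwitchedInTime[ 0 ] */
    task_t *current;               /* mirrors pxCurrentTCB */
} kernel_t;

/* Returns true if a switch happened, false if it was deferred. */
static bool switch_context(kernel_t *k, uint32_t now, task_t *next)
{
    if (k->scheduler_suspended != 0u) {
        k->yield_pending = true;           /* switch later, when resumed */
        return false;
    }
    k->yield_pending = false;
    if (now > k->switched_in_time) {       /* guard against a misbehaving counter */
        k->current->run_time += now - k->switched_in_time;
    }
    k->switched_in_time = now;             /* the incoming task starts its slice now */
    k->current = next;
    return true;
}
```

Task `a` runs from tick 100 to 250, so it is credited 150 ticks before `b` takes over. Once the scheduler is suspended, a further request only sets the pending flag.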

taskSELECT_HIGHEST_PRIORITY_TASK: selecting the highest-priority task

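- When a context switch is triggered (for example because the running task yields or blocks), the macro starts at uxTopReadyPriority, the highest priority at which a task may be ready. From there it walks down the ready lists until it finds one that is not empty. The idle task keeps priority 0 non-empty, so the search always ends there at the latest

- pxCurrentTCB is set to the next task in that priority's ready list. Each ready list is a circular doubly linked list, and listGET_OWNER_OF_NEXT_ENTRY advances the list's index on every call, so tasks of equal priority take turns

- uxTopReadyPriority is overwritten with the priority at which the new task was found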

```c
/* Generic C version, used when configUSE_PORT_OPTIMISED_TASK_SELECTION is 0. */
#define taskSELECT_HIGHEST_PRIORITY_TASK()                                                    \
    do {                                                                                      \
        UBaseType_t uxTopPriority = uxTopReadyPriority; /* Highest priority that may have a ready task */ \
                                                                                              \
        /* Find the highest priority queue that contains ready tasks. */                      \
        /* An empty list means no task is ready at this priority: try the next lower one. */  \
        while( listLIST_IS_EMPTY( &( pxReadyTasksLists[ uxTopPriority ] ) ) != pdFALSE )      \
        {                                                                                     \
            configASSERT( uxTopPriority );                                                    \
            --uxTopPriority;                                                                  \
        }                                                                                     \
                                                                                              \
        /* listGET_OWNER_OF_NEXT_ENTRY indexes through the list, so the tasks of              \
         * the same priority get an equal share of the processor time. */                    \
        /* The ready list is circular, so advancing its index rotates equal-priority tasks. */ \
        listGET_OWNER_OF_NEXT_ENTRY( pxCurrentTCB, &( pxReadyTasksLists[ uxTopPriority ] ) ); \
        uxTopReadyPriority = uxTopPriority; /* Remember where the ready task was found */    \
    } while( 0 ) /* taskSELECT_HIGHEST_PRIORITY_TASK */
```
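The macro's search-and-rotate behaviour can be modelled with plain arrays. In this sketch, each priority has a small ring of task IDs with a cursor standing in for the list index that listGET_OWNER_OF_NEXT_ENTRY advances. FreeRTOS itself uses circular doubly linked List_t structures. Every name here is hypothetical.

```c
#include <stddef.h>
#include <assert.h>

#define NUM_PRIORITIES  4u   /* hypothetical, plays the role of configMAX_PRIORITIES */
#define MAX_PER_LIST    4u

/* Stand-in for one ready list: a ring of task IDs plus a cursor. */
typedef struct {
    int tasks[MAX_PER_LIST];
    size_t count;
    size_t next;
} ready_list;

static ready_list ready[NUM_PRIORITIES];
static size_t top_ready_priority;   /* mirrors uxTopReadyPriority */

static void add_ready(int task, size_t prio)
{
    ready_list *l = &ready[prio];
    assert(l->count < MAX_PER_LIST);
    l->tasks[l->count++] = task;
    if (prio > top_ready_priority) {
        top_ready_priority = prio;   /* raised when a higher-priority task becomes ready */
    }
}

static void clear_ready(size_t prio)
{
    ready[prio].count = 0u;          /* e.g. every task at this priority has blocked */
    ready[prio].next = 0u;
}

/* Walk down to the first non-empty list, then take the next task in its ring. */
static int select_highest(void)
{
    size_t prio = top_ready_priority;
    while (ready[prio].count == 0u) {
        assert(prio > 0u);           /* the idle task keeps priority 0 non-empty */
        --prio;
    }
    ready_list *l = &ready[prio];
    size_t i = l->next % l->count;
    l->next = i + 1u;
    top_ready_priority = prio;       /* lowered lazily, only during selection */
    return l->tasks[i];
}
```

Two tasks at priority 2 alternate. Once both leave the ready list, the search falls through to the idle task at priority 0, and top_ready_priority drops with it.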

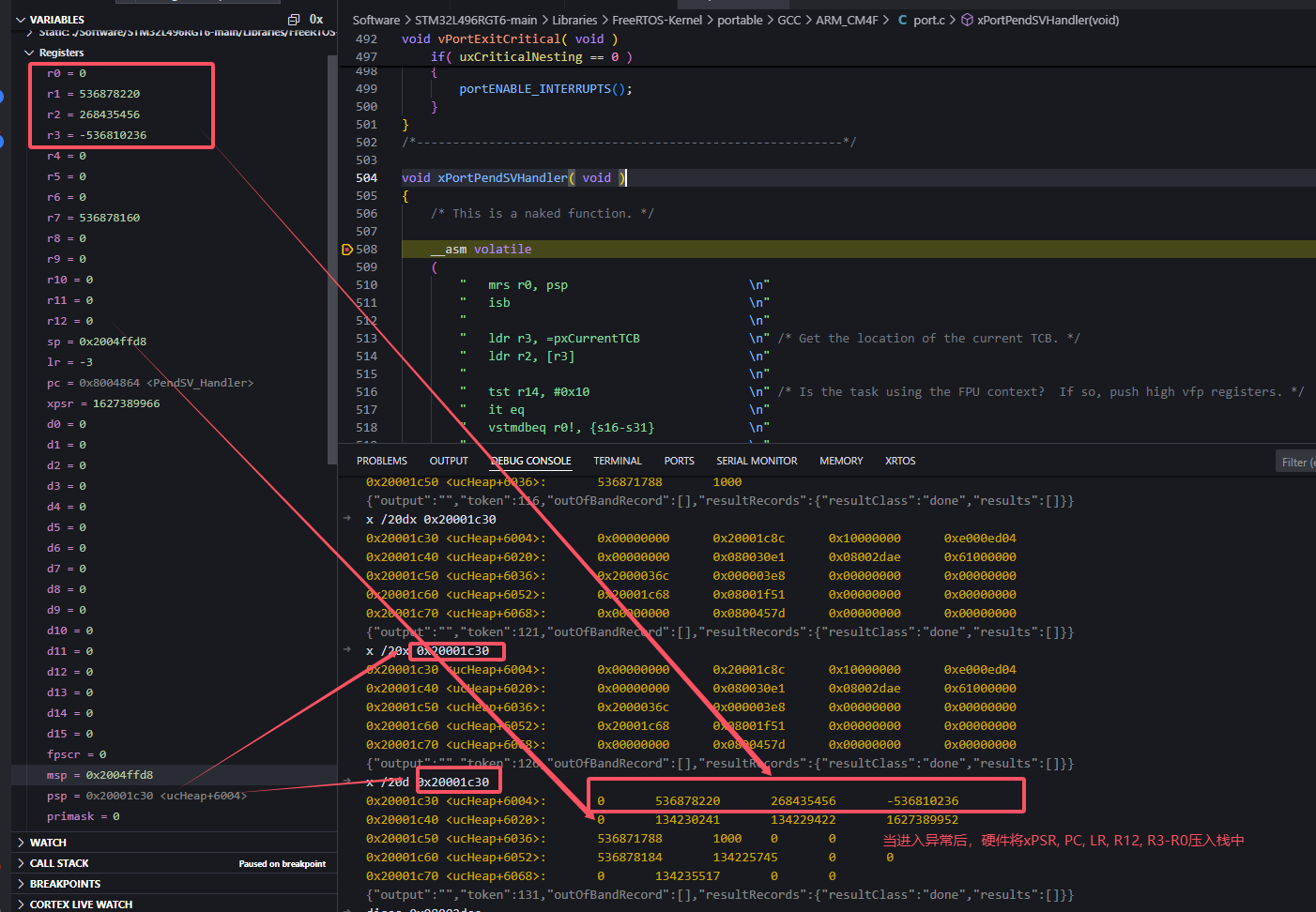

xPortPendSVHandler: the core of task switching

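- This handler resembles vPortSVCHandler, but it performs every switch between two tasks. vPortSVCHandler runs only once, to start the first task

- Before the handler runs, the hardware has already pushed xPSR, PC, LR, R12 and R3-R0 onto the outgoing task's PSP stack, as shown in the figure. If the task was using the FPU, the frame also reserves space for S0-S15 and FPSCR

- Manually push the remaining registers onto the task stack: r4-r11 and r14 (EXC_RETURN), plus s16-s31 if the task used the FPU. Then store the new stack top in the TCB

- Push r0 and r3 onto the main stack (MSP), because the call to vTaskSwitchContext may overwrite them (they are caller-saved under the ARM procedure call standard)

- Raise BASEPRI to configMAX_SYSCALL_INTERRUPT_PRIORITY. This masks only the interrupts that are allowed to call FreeRTOS APIs, so they cannot touch kernel data during the switch. Interrupts with a higher (numerically lower) priority still run

- Call vTaskSwitchContext, which changes pxCurrentTCB to the next task

- Clear BASEPRI and pop r0 and r3

- Load the new task's pxTopOfStack and pop r4-r11 and r14, plus s16-s31 if its frame holds FP state. Then point PSP at the remaining frame

- Return from the exception with bx r14. On exit, the hardware pops R0-R3, R12, LR, PC and xPSR from the new task's PSP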
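The masking rule applied when PendSV raises BASEPRI fits in a one-line predicate. This sketch assumes all implemented priority bits are preemption bits (no sub-priority), so raw 8-bit priority values can be compared directly. The function name is hypothetical. The example values assume a device with 4 implemented bits and configMAX_SYSCALL_INTERRUPT_PRIORITY = 5 << 4 = 0x50.

```c
#include <stdint.h>
#include <stdbool.h>
#include <assert.h>

/* Is an exception with 8-bit priority value `prio` held pending while
 * BASEPRI contains `basepri`? Lower values are more urgent. */
static bool masked_by_basepri(uint8_t prio, uint8_t basepri)
{
    if (basepri == 0u) {
        return false;            /* BASEPRI = 0 turns priority masking off */
    }
    return prio >= basepri;      /* same or lower urgency than BASEPRI is blocked */
}
```

With BASEPRI at 0x50, an interrupt at 0x40 still preempts the context switch. Such an interrupt must therefore never call FreeRTOS APIs.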

```c
void xPortPendSVHandler( void )
{
    /* This is a naked function. */
    // On entry the hardware has already pushed xPSR, PC, LR, R12 and R3-R0 onto the PSP stack

    __asm volatile
    (
        " mrs r0, psp              \n" // r0 = PSP of the outgoing task
        " isb                      \n"
        "                          \n"
        " ldr r3, =pxCurrentTCB    \n" /* Get the location of the current TCB. */ // r3 = &pxCurrentTCB
        " ldr r2, [r3]             \n" // r2 = pxCurrentTCB, whose first member is pxTopOfStack
        "                          \n"
        " tst r14, #0x10           \n" /* Is the task using the FPU context? If so, push high vfp registers. */ // EXC_RETURN bit 4 == 0 means the frame holds FP state
        " it eq                    \n"
        " vstmdbeq r0!, {s16-s31}  \n" // Push s16-s31 only in that case
        "                          \n"
        " stmdb r0!, {r4-r11, r14} \n" /* Save the core registers. */ // Push r4-r11 and r14 (EXC_RETURN)
        " str r0, [r2]             \n" /* Save the new top of stack into the first member of the TCB. */
        "                          \n"
        " stmdb sp!, {r0, r3}      \n" // Save r0 and r3 on the MSP; the call below may clobber them
        " mov r0, %0               \n" // r0 = configMAX_SYSCALL_INTERRUPT_PRIORITY
        " msr basepri, r0          \n" // Mask interrupts that may call FreeRTOS APIs during the switch
        " dsb                      \n"
        " isb                      \n"
        " bl vTaskSwitchContext    \n" // Choose the next task; updates pxCurrentTCB
        " mov r0, #0               \n"
        " msr basepri, r0          \n" // BASEPRI = 0: unmask
        " ldmia sp!, {r0, r3}      \n" // Restore r0 and r3
        "                          \n"
        " ldr r1, [r3]             \n" /* The first item in pxCurrentTCB is the task top of stack. */ // r1 = pxCurrentTCB, now the TCB of the incoming task
        " ldr r0, [r1]             \n" // r0 = incoming task's pxTopOfStack
        "                          \n"
        " ldmia r0!, {r4-r11, r14} \n" /* Pop the core registers. */ // Restore r4-r11 and r14 (EXC_RETURN)
        "                          \n"
        " tst r14, #0x10           \n" /* Is the task using the FPU context? If so, pop the high vfp registers too. */
        " it eq                    \n"
        " vldmiaeq r0!, {s16-s31}  \n" // Restore s16-s31 if the incoming task's frame holds FP state
        "                          \n"
        " msr psp, r0              \n" // PSP now points at the incoming task's hardware-stacked frame
        " isb                      \n"
        "                          \n"
        #ifdef WORKAROUND_PMU_CM001 /* XMC4000 specific errata workaround. */
            #if WORKAROUND_PMU_CM001 == 1
        " push { r14 }             \n"
        " pop { pc }               \n"
            #endif
        #endif
        "                          \n"
        " bx r14                   \n" // Exception return, as in vPortSVCHandler
        "                          \n"
        " .ltorg                   \n"
        ::"i" ( configMAX_SYSCALL_INTERRUPT_PRIORITY )
    );
    // When PendSV exits, the hardware pops R0-R3, R12, LR, PC and xPSR from the new PSP
}
```
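How much stack a switched-out task consumes depends on EXC_RETURN bit 4, which both paths of the handler test with `tst r14, #0x10`. The sketch below counts the words saved per task. It is a hypothetical helper, and it ignores the optional alignment padding word the hardware may add to the stacked frame.

```c
#include <stdint.h>
#include <stdbool.h>
#include <assert.h>

/* Bit 4 of EXC_RETURN is 0 when the hardware frame includes FP state. */
static bool frame_has_fp(uint32_t exc_return)
{
    return (exc_return & 0x10u) == 0u;   /* same test as "tst r14, #0x10" + "it eq" */
}

/* Words stored on a task's stack when PendSV switches it out. */
static unsigned words_saved(uint32_t exc_return)
{
    bool fp = frame_has_fp(exc_return);
    unsigned hw = fp ? 26u : 8u;  /* R0-R3, R12, LR, PC, xPSR (+ S0-S15, FPSCR, reserved) */
    unsigned sw = 9u;             /* r4-r11 and r14 pushed by the handler */
    if (fp) {
        sw += 16u;                /* s16-s31 */
    }
    return hw + sw;
}
```

Without FP state, 8 hardware words plus 9 software words make 17 words (68 bytes). With FP state, 26 + 25 = 51 words (204 bytes), which matters when sizing stacks for tasks that use floating point.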


Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. Please credit Jamie793's Blog as the source when reposting.
